A lot of the squee I've heard about the sequencing of the human genome and the ever-dropping cost to completely sequence a single genome has been in the nature of "we're figuring out how nature programs biology!" This is true to a point, but the reality of programming new life, or new functions of life, is far, far in the future. Yes, we've already created artificial life, but it wasn't done with full understanding of the source code we used; we took the code governing the functions we wanted and fitted the pieces together.

Biology is in some ways like computers, in that there is a (presumably) deterministic process that governs the rules of how it works. It exists in a fundamentally chaotic environment, which makes extracting that determinism pretty hard. But we're sure there is a causal chain for almost anything, if only we look hard enough. With computers we know it all end to end (we wrote the things, so we should), but we are only now reaching levels of complexity where these systems can mimic non-deterministic behavior. Even so, if we dig down into the failure analysis we can isolate the root and associated causes of the failure chain. We want to do that with biology.

We are far, far away from doing that.

Biology, up until the genomics 'revolution', has largely been about describing the function of things. Our ability to stick probes in places has improved over time, which in turn has increased our understanding of how biology interacts with the environment at large. We've even made large-scale changes to organisms to see how they behave under faulty conditions, just so we can better figure out how they work. Classic reverse-engineering, in other words. You'd think having access to the source code would make it much easier. But... not really.

Let's take an example: a hand-held GPS unit. This relatively simple device should be easy to reverse engineer. It has a simple function: provide a precise location. It has some ancillary functions, such as providing accurate time and giving a map of the surroundings. OK.

After detailed analysis of this device we can derive many things:

- It uses radio waves of a specific set of wavelengths to receive signals.
- Those signals are broadcast by a constellation of satellites, and it has to receive signals from at least three of them (four for a full three-dimensional fix) before it can report a position.
- The time provided is very stable, though if it doesn't receive signals from the satellites it will drift at a mostly predictable rate.
- Which bits receive the satellite signal, since it doesn't work if they're removed.
- Where the maps are stored, since removing that bit leaves it without any.
- A whole variety of ways to electrically break the gizmo.
- How it seems to work electrically.

Additionally, we can infer a few more things:

- The probable orbits of the satellites themselves.
- The math used to generate position.
- The existence of an authoritative time source.
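
The "math used to generate position" can be sketched as trilateration. This is a minimal sketch under simplifying assumptions, not how a real receiver works: it is flat (2D), uses exact distances to three hypothetical transmitter positions, and ignores the receiver clock error that real GPS also solves for (which is why a full 3D fix needs a fourth satellite). The function name `trilaterate_2d` is illustrative only.

```python
import math


def trilaterate_2d(anchors, ranges):
    """Estimate (x, y) from three known anchor positions and exact distances.

    Simplified illustration: flat 2D geometry, no measurement noise, and no
    receiver clock error (real GPS solves for that too, hence four satellites).
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges
    # Subtracting circle 1's equation from circles 2 and 3 cancels the x^2 and
    # y^2 terms, leaving two linear equations: a*x + b*y = c and d*x + e*y = f.
    a, b = 2 * (x2 - x1), 2 * (y2 - y1)
    c = r1**2 - r2**2 - x1**2 + x2**2 - y1**2 + y2**2
    d, e = 2 * (x3 - x1), 2 * (y3 - y1)
    f = r1**2 - r3**2 - x1**2 + x3**2 - y1**2 + y3**2
    det = a * e - b * d
    if math.isclose(det, 0.0, abs_tol=1e-12):
        raise ValueError("anchors are collinear; the position is ambiguous")
    # Cramer's rule for the 2x2 linear system.
    return (c * e - b * f) / det, (a * f - c * d) / det


if __name__ == "__main__":
    # Hypothetical anchor positions; the true position is (3, 4).
    anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    true_x, true_y = 3.0, 4.0
    ranges = [math.hypot(true_x - x, true_y - y) for x, y in anchors]
    x, y = trilaterate_2d(anchors, ranges)
    print(f"estimated position: ({x:.3f}, {y:.3f})")
```

Subtracting one circle equation from the other two turns an awkward nonlinear problem into a small linear one; if the three anchors sit on a single line, that system has no unique solution, which the sketch reports as an error.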

Nifty stuff. What does the equivalent of 'genomics' give us? It gives us the raw machine code that runs the device itself. Keep in mind that we don't know what each instruction does, and don't yet have high confidence in our ability to discriminate between instructions. And most importantly, we don't know the features of the instruction-set architecture. There is a LOT more work to do before we can make the top-level functional analysis meet up with the bottom-level instructional analysis. Once the two do join up, we should be able to understand how it fundamentally works.
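
To make the ISA problem concrete on the computer side, here is a small sketch showing how the same four bytes decode to different values depending on assumptions about word size, byte order, and where an instruction starts. The byte values, the layout labels, and the helper name `interpretations` are arbitrary and hypothetical; without knowing the ISA, nothing in the bytes themselves says which reading is the right one.

```python
import struct


def interpretations(raw: bytes) -> dict:
    """Decode the first bytes of `raw` under several hypothetical layouts."""
    return {
        # Two 16-bit words, most significant byte first.
        "16-bit big-endian": struct.unpack(">HH", raw[:4]),
        # The same two words, least significant byte first.
        "16-bit little-endian": struct.unpack("<HH", raw[:4]),
        # One 32-bit word, least significant byte first.
        "32-bit little-endian": struct.unpack("<I", raw[:4]),
        # A 16-bit word that starts one byte later (a shifted boundary).
        "16-bit big-endian, offset 1": struct.unpack(">H", raw[1:3]),
    }


if __name__ == "__main__":
    dump = bytes([0x12, 0x34, 0x56, 0x78])  # arbitrary example bytes
    for layout, words in interpretations(dump).items():
        print(f"{layout:30} {[hex(w) for w in words]}")
```

Every line of output is a self-consistent reading of the same data, which is roughly the position genomics leaves us in until the architecture underneath is understood.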

But in the meantime we have to reverse-engineer the ISA, the processor architecture itself, the signal-processing algorithms (which may be very different from what we inferred in the functional analysis), how the device tolerates transient variability in the environment, how it uses data storage, and other such interesting things. There is a LOT of work ahead.

Biology is a lot harder, in no small part because it has built up over billions of years and the same kinds of problems have been solved any number of ways. What's more, there is enough error tolerance in the system that you have to do a lot of correlational work before you can identify what's signal and what's noise. Environment also plays a key role, which is most vexing, as environment is fundamentally chaotic and cannot be 100% controlled for.

We're learning that a significant part of our genome is dedicated to surviving faulty instructions in our genetic code, something we hadn't realized was there before. We're learning ever more interesting ways that faults can change the effect of code. We're learning that the mechanics we had presumed for how code is implemented are in fact wrong in small but significant ways. The work continues.

We may have the machine code of life, but it is not broken down into handy functions like CreateRetina(). Something like that would be source code, and would be far more useful to us systemizing hominids. We may get there, but we're not even close yet.

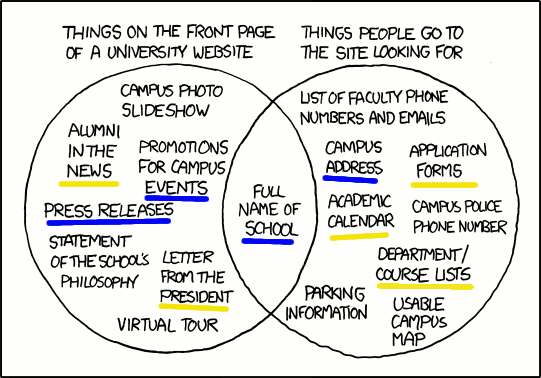

The blue underlines are items on our front page; the gold underlines are items linked directly from the front page. I happen to know you can get to some of the other items directly from the front page, but they're not labeled in any way that would lead you to expect to get there from the top. I marked those in psychic white.
