Saturday, January 16, 2010

The things you learn

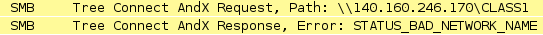

The presentation of it at the packet-level was pretty specific, though. XP clients (and Samba clients) would get to the second step of the connection setup process for mapping a drive and time out.

- -> Syn

- <- Syn/Ack

- -> NBSS, Session Request, to $Server<20> from $Client<00>

- <- NBSS, Positive Session Response

- -> SMB, Negotiate Protocol Request

- <- Ack

- [70+ seconds pass]

- -> FIN

- <- FIN/Ack
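For anyone who hasn't stared at one of these in a packet capture, here is a rough sketch of what the third packet in that trace looks like on the wire. It follows the NetBIOS session service layout from RFC 1001/1002: names are padded to 15 characters, the 16th byte is the suffix (the `<20>` and `<00>` above), and each byte is split into two letters. The host names `CLU-SHARE1` and `XPCLIENT` are made up for illustration.

```python
import struct


def encode_netbios_name(name: str, suffix: int) -> bytes:
    """First-level encode a NetBIOS name: 16 raw bytes become 32 letters."""
    # Pad (or truncate) to 15 characters, then append the one-byte suffix.
    raw = name.upper().encode("ascii")[:15].ljust(15, b" ") + bytes([suffix])
    out = bytearray()
    for byte in raw:
        out.append(0x41 + (byte >> 4))    # high nibble mapped to 'A'..'P'
        out.append(0x41 + (byte & 0x0F))  # low nibble mapped to 'A'..'P'
    return bytes(out)


def build_session_request(server: str, client: str) -> bytes:
    """Build an NBSS Session Request (type 0x81) to server<20> from client<00>."""
    def wire(name: str, suffix: int) -> bytes:
        # Length byte (0x20 = 32), the encoded name, then a zero terminator.
        return b"\x20" + encode_netbios_name(name, suffix) + b"\x00"

    # Called name (the server) goes first, then the calling name (the client).
    payload = wire(server, 0x20) + wire(client, 0x00)
    # Header: packet type, flags, 16-bit big-endian payload length.
    return struct.pack(">BBH", 0x81, 0, len(payload)) + payload


if __name__ == "__main__":
    packet = build_session_request("CLU-SHARE1", "XPCLIENT")  # hypothetical names
    print(packet.hex())
```

The server's Positive Session Response is just the four-byte header with type 0x82, which is why that step looked so healthy right before everything stalled.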

In the end it took a call to Microsoft. Once we got to the right network person, they knew immediately what the problem was.

ForeFront is now going on those servers. It really should have been on a month ago, but because these cluster nodes were supposed to go live for fall quarter they were fully staged up in August, before we even had the ForeFront clients. We never remembered to replace SEP with ForeFront.

Labels: clustering, microsoft

Wednesday, September 30, 2009

I have a degree in this stuff

- A career in academics

- A career in programming

Anyway. Every so often I stumble across something that causes me to go Ooo! ooo! over the sheer computer science of it. Yesterday I stumbled across Barrelfish, and this paper. If I weren't sick today I'd have finished it, but even as far as I've gotten into it I can see the implications of what they're trying to do.

The core concept behind the Barrelfish operating system is to assume that each computing core does not share memory and has access to some kind of message passing architecture. This has the side effect of having each computing core running its own kernel, which is why they're calling Barrelfish a 'multikernel operating system'. In essence, they're treating the insides of your computer like the distributed network that it is, and using already existing distributed computing methods to improve it. The type of multi-core we're doing now, SMP, ccNUMA, uses shared memory techniques rather than message passing, and it seems that this doesn't scale as far as message passing does once core counts go higher.

They go into a lot more detail in the paper about why this is. A big one is the heterogeneity of CPU architectures out there in the marketplace, and they're not just talking AMD vs Intel vs CUDA, this is also Core vs Core2 vs Nehalem. This heterogeneity in the marketplace makes it very hard for a traditional Operating System to be optimized for a specific platform.

A multikernel OS would use a discrete kernel for each microarchitecture. These kernels would communicate with each other using OS-standardized message passing protocols. On top of these microkernels would be created the abstraction called an Operating System upon which applications would run. Due to the modularity at the base of it, it would take much less effort to provide an optimized microkernel for a new microarchitecture.

The use of message passing is very interesting to me. Back in college, parallel computing was my main focus. I ended up not pursuing that area of study in large part because I was a strictly C student in math, parallel computing was a largely academic endeavor when I graduated, and you needed to be at least a B student in math to hack it in grad school. It still fired my imagination, and there was squee when the Pentium Pro was released and you could do 2 CPU multiprocessing.

In my Databases class, we were tasked with creating a database-like thingy in code and to write a paper on it. It was up to us what we did with it. Having just finished my Parallel Computing class, I decided to investigate distributed databases. So I exercised the PVM extensions we had on our compilers thanks to that class. I then used the six Unix machines I had access to at the time to create a 6-node distributed database. I used statically defined tables and queries since I didn't have time to build a table parser or query processor and needed to get it working so I could do some tests on how optimization of table positioning impacted performance.

Looking back on it 14 years later (eek) I can see some serious faults about my implementation. But then, I've spent the last... 12 years working with a distributed database in the form of Novell's NDS and later eDirectory. At the time I was doing this project, Novell was actively developing the first version of NDS. They had some problems with their implementation too.

My results were decidedly inconclusive. There was a noise factor in my data that I was not able to isolate, and it managed to drown out what differences there were between my optimized and non-optimized runs (in hindsight I needed larger tables by an order of magnitude or more). My analysis paper was largely an admission of failure. So when I got an A on the project I was confused enough that I went to the professor and asked how this was possible. His response?

"Once I realized you got it working at all, that's when you earned the A. At that point the paper didn't matter."

Dude. PVM is a message passing architecture, like most distributed systems. So yes, distributed systems are my thing. And they're talking about doing this on the motherboard! How cool is that?

Both Linux and Windows are adopting more message-passing architectures in their internal structures, as they scale better on highly parallel systems. In Linux this involved reducing the use of the Big Kernel Lock in anything possible, as invoking the BKL forces the kernel into single-threaded mode and that's not a good thing with, say, 16 cores. Windows 7 involves similar improvements. As more and more cores sneak into everyday computers, this becomes more of a problem.

An operating system working without the assumption of shared memory is a very different critter. Operating state has to be replicated to each core to facilitate correct functioning, you can't rely on a common memory address to handle this. It seems that the form of this state is key to performance, and is very sensitive to microarchitecture changes. What was good on a P4, may suck a lot on a Phenom II. The use of a per-core kernel allows the optimal structure to be used on each core, with changes replicated rather than shared which improves performance. More importantly, it'll still be performant 5 years after release assuming regular per-core kernel updates.
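To make the replicated-state idea a bit more concrete, here is a toy sketch in Python. It is emphatically not how Barrelfish is built; the class and key names are invented, threads stand in for cores, and it ignores the hard parts (ordering of conflicting updates, failures). The only point is that each "kernel" owns a private copy of the state and learns about changes through its inbox rather than by peeking at shared memory.

```python
import queue
import threading


class CoreKernel:
    """One per-core kernel with a private state replica and a message inbox."""

    def __init__(self, core_id):
        self.core_id = core_id
        self.state = {}             # private replica, touched only by run()
        self.inbox = queue.Queue()  # the only way other cores reach this one

    def run(self):
        while True:
            msg = self.inbox.get()
            if msg is None:         # shutdown sentinel
                return
            key, value = msg
            self.state[key] = value  # apply the replicated update locally


def broadcast(kernels, key, value):
    """Replicate an update by sending it to every kernel, sender included."""
    for kernel in kernels:
        kernel.inbox.put((key, value))


def simulate(n_cores=4):
    kernels = [CoreKernel(i) for i in range(n_cores)]
    threads = [threading.Thread(target=k.run) for k in kernels]
    for t in threads:
        t.start()
    # Each core publishes one fact about itself; keys never collide, so the
    # demo sidesteps the question of ordering conflicting updates.
    for k in kernels:
        broadcast(kernels, f"runqueue-len-core{k.core_id}", k.core_id * 10)
    for k in kernels:
        k.inbox.put(None)
    for t in threads:
        t.join()
    return [k.state for k in kernels]


if __name__ == "__main__":
    for core_id, state in enumerate(simulate()):
        print(core_id, state)
```

Every replica ends up with the same contents, and nothing ever took a lock on a shared structure to get there. The cost is that every update is a message, which is exactly the trade the paper is arguing about.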

You'd also be able to use the 1.75GB of GDDR3 on your GeForce 295 as part of the operating system if you really wanted to! And some might.

I'd burble further, but I'm sick so not thinking straight. Definitely food for thought!

Friday, September 11, 2009

Mac OS X and Windows 2008 clusters

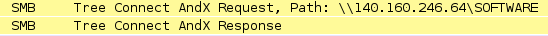

- OS X (except for 10.4) attempts to make smb/cifs connections by the resolved IP address of given names. So a connection string like smb://clu-share1.winclu.wwu.edu/share1/ will be translated into \\140.160.12.34\share1 when it attempts to talk to the server.

- Windows failover clustering requires the server name when connecting. Otherwise it tells you no-can-do. You can't use \\140.160.12.34\share1\ syntax, you MUST use a name.

However, if you attempt to connect to a non-clustered share, perhaps a share on one of the cluster nodes rather than a cluster service, it works just fine.

Funny, eh?

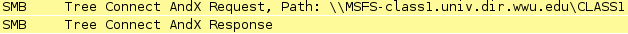

So what's a mac-owner, of which we have quite a lot, to do? The fix is pretty simple, append ":139" to the end of the server part of the connection string. In the above example, "smb://msfs-class1.univ.dir.wwu.edu:139/class1". For some reason, this forces the mac to use a name when connecting to the remote system.
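If you end up handing connection strings out by the hundred, the rewrite is easy to script. This is a small sketch using Python's standard `urllib.parse`; the function name `force_netbios_port` is my own invention, and all it does is add ":139" when no port was given.

```python
from urllib.parse import urlsplit, urlunsplit


def force_netbios_port(url: str, port: int = 139) -> str:
    """Return the smb:// URL with an explicit port, unless it already has one."""
    parts = urlsplit(url)
    if parts.scheme != "smb":
        raise ValueError(f"not an smb:// URL: {url!r}")
    if parts.port is not None:
        return url  # someone already chose a port, leave it alone
    return urlunsplit(parts._replace(netloc=f"{parts.netloc}:{port}"))


if __name__ == "__main__":
    print(force_netbios_port("smb://msfs-class1.univ.dir.wwu.edu/class1"))
```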

Apparently, OS X 10.4 (Tiger) did this normally, but Apple changed it back to the non-working version with 10.5 (Leopard). And we've tested, 10.6 (Snow Leopard) is broken the same way.

Why this is so is up for debate. I'm personally fond of the idea that the Windows SMB stack isn't detailed enough to tell what IP address an incoming connection came in on and virtualize answers accordingly. For stand-alone servers this is a simple thing; if you can talk to me at all, here are all of my shares. For conditional sharing like with clusters, you can only get these shares on these IP's, the SMB stack apparently lacks a way to discriminate appropriately. Clearly name-based is in there, but not IP.

No word on if 2008 R2 behaves this way. Microsoft dropped R2 about... three weeks too late for us to go with it for this cluster.

This is going to be one of those FAQs the helpdesks are going to get real used to answering.

Labels: clustering

Wednesday, September 02, 2009

A clustered file-system, for windows?

http://blogs.msdn.com/clustering/archive/2009/03/02/9453288.aspx

On the surface it looks like NTFS behaving like OCFS. But Microsoft has a warning on this page:

In Windows Server® 2008 R2, the Cluster Shared Volumes feature included in failover clustering is only supported for use with the Hyper-V server role. The creation, reproduction, and storage of files on Cluster Shared Volumes that were not created for the Hyper-V role, including any user or application data stored under the ClusterStorage folder of the system drive on every node, are not supported and may result in unpredictable behavior, including data corruption or data loss on these shared volumes. Only files that are created for the Hyper-V role can be stored on Cluster Shared Volumes. An example of a file type that is created for the Hyper-V role is a Virtual Hard Disk (VHD) file.

Before installing any software utility that might access files stored on Cluster Shared Volumes (for example, an antivirus or backup solution), review the documentation or check with the vendor to verify that the application or utility is compatible with Cluster Shared Volumes.

So unlike OCFS2, this multi-mount NTFS is only for VM's and not for general file-serving. In theory you could use this in combination with Network Load Balancing to create a high-availability cluster with even higher uptime than failover clusters already provide. The devil is in the details though, and Microsoft alludes to them.

A file system being used for Hyper-V isn't a complex locking environment. You'll have as many locks as there are VHD files, and they won't change often. Contrast this with a file-server where you can have thousands of locks that change by the second. Additionally, unless you disable Opportunistic Locking you are at grave risk of corrupting files used by more than one user (Access databases!) if you are using the multi-mount NTFS.

Microsoft will have to promote awareness of this type of file-system into the SMB layer before this can be used for file-sharing. SMB has its own lock layer, and this will have to coordinate the SMB layers in the other nodes for it to work right. This may never happen, we'll see.

Labels: clustering, linux, microsoft

Wednesday, April 22, 2009

A new version of BIND

Modularity:

...the selection of a variety of back-ends for data storage, be it the current in-memory database, a traditional SQL-based server, an embedded database engine or back-ends for specific applications such as a high performance, pre-compiled answer database.

Which makes me think of eDirectory backed DNS. Novell has had this for ages with NetWare, and from what I recall it was based on BIND. But... BIND8. BIND10 would formalize this in the linux base, which would further allow Novell to publish a more 'pure' eDir-integrated BIND.

Clustering:

run on multiple but related systems simultaneously, using a pluggable, open-source architecture to enable backbone communications between individual members of the cluster. These coordination services would enable a server farm to maintain consistency and coherence.

This is exactly what AD-integrated DNS and the DNS on NetWare have been doing for over 8 years now. Glad to see BIND catch up.

The big thing about using a database of some kind as the back-end for DNS is that you no longer have to create Secondary servers and muck about with Zone Transfers. For domains that change on a second by second basis, such as an AD DNS domain with dynamic updates enabled and thousands of computers during morning power-on, it is entirely possible for a BIND secondary-server to be missing many, many DNS updates. Microsoft has known about this issue, which is why they have their own directory-integrated DNS service.

This also shows just how creaky the NetWare DNS service really is. That's based on BIND8 code, which is now over 10 years old. Very creaky.

I'm looking forward to BIND10. It is a needed update that addresses DNS as it is done today, and would better enable BIND to handle large Active Directory domains.

Labels: clustering, linux, microsoft, netware, sysadmin

Wednesday, April 15, 2009

Windows 7 forces major change

The Vista client has been vexing in this regard since it is so painfully slow in our clustered environment. The reason it is slow is the same reason the first WinXP clients were slow: the Microsoft and Novell name-resolution processes compete in bad ways. As each drive letter we map is its own virtual-server, every time you attempt to display a Save/Open box or open Windows Explorer it has to resolve-timeout-resolve each and every drive letter. This means that opening a Save/Open box on a Vista machine running the Novell client can take upwards of 5 minutes to display thanks to the timeouts. Novell knows about this issue, and has reported it to Microsoft. This is something Microsoft has to fix, and they haven't yet.

This is vexing enough that certain highly influential managers want to make sure that the same thing doesn't happen again for Windows 7. As anyone who follows any piece of the tech media knows, Windows 7 has been deemed, "Vista done right," and we expect a lot faster uptake of Win7 than WinVista. So we need to make sure our network can accommodate that on release-day. Make it so, said the highly placed manager. Yessir, we said.

So last night I turned CIFS on for all the file services on the cluster. It was that or migrate our entire file-serving function to Windows. The choice, as you can expect, was an easy one.

This morning our Mac users have been decidedly gleeful, as CIFS has long password support where AFP didn't. The one sysadmin here in techservices running Vista as his primary desktop has uninstalled the Novell Client and is also cheerful. Happily for us, the directive from said highly placed manager was accompanied by a strong suggestion to all departments that domaining PCs into the AD domain would be a Really Good Idea. This allows us to use the AD login-script, as well as group-policies, for those Windows machines that lack a Novell Client.

Ultimately, I expect the Novell Client to slowly fade away as a mandatory install. So that clientless-future I said we couldn't take part in? Microsoft managed to push us there.

Labels: clustering, microsoft, netware, novell, sysadmin

Wednesday, February 04, 2009

The mystery of lsimpe.cdm

What's more, the two Windows backup-to-disk servers we've attached to the EVA4400 (and the MSA1500 for that matter) have the HP MPIO drivers installed, which are extensions of the Microsoft MPIO stack. Looking at the bandwidth chart on the fiber-channel fabric I see that these Windows servers are also doing load balancing over both of the paths. This is nifty! Also, when I last updated the XCS code on the EVA4400 both of those servers didn't even notice the controller reboots. EVEN NICER!

I want to do the same thing with NetWare. On the surface, turning on MPIO support is dead easy:

Startup.ncf file:

SET MULTI-PATH SUPPORT = ON

LOAD QL2X00.HAM SLOT=10001 /LUNS /ALLPATHS /PORTNAMES

Tada. Reboot, present both paths in your zoning, and issue the "list failover devices" command on the console, and you'll get a list. In theory should one go away, it'll seamlessly move over to the other.

But what it won't do is load-balance. Unfortunately, the documentation on NetWare's multi-path support is rather scanty, focusing more on configuring path failover priority. The fact that the QL2X00.HAM driver itself can do it all on its own without letting NetWare know (the "allpaths" and "portnames" options tell it to not do that and let NetWare do the work) is a strong hint that MPIO is a fairly lightweight protocol.
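The difference between what NetWare gives me and what the Windows boxes are doing comes down to how the next path gets picked. Here is a toy model of the two policies. It is only an illustration of the concept; the class, the method names and the `hba0`/`hba1` path labels are all made up, and it says nothing about how NetWare or the HP MPIO drivers are actually implemented.

```python
from itertools import cycle


class MultipathDevice:
    """A LUN reachable over several paths, listed in priority order."""

    def __init__(self, paths):
        self.healthy = {path: True for path in paths}  # dict keeps priority order
        self._rotation = cycle(paths)

    def fail(self, path):
        self.healthy[path] = False

    def pick_failover(self):
        """Failover only: always the highest-priority path that is still up."""
        for path, ok in self.healthy.items():
            if ok:
                return path
        raise RuntimeError("no healthy path left")

    def pick_round_robin(self):
        """Load balancing: rotate through the paths, skipping dead ones."""
        for _ in range(len(self.healthy)):
            path = next(self._rotation)
            if self.healthy[path]:
                return path
        raise RuntimeError("no healthy path left")


if __name__ == "__main__":
    dev = MultipathDevice(["hba0", "hba1"])  # hypothetical path names
    print([dev.pick_failover() for _ in range(4)])
    print([dev.pick_round_robin() for _ in range(4)])
```

With failover-only, the second path carries nothing until the first one dies. With round-robin, both paths carry I/O all the time, which is what shows up on the fabric bandwidth charts.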

On the support forums you'll get several references to the LSIMPE.CDM file. With interesting phrases like, "that's the multipath driver", and, "Yeah, it isn't well documented." The data on the file itself is scanty, but suggestive:

LSIMPE.CDM

Loaded from [C:\NWSERVER\DRIVERS\] on Feb 4, 2009 3:32:13 am

(Address Space = OS)

LSI Multipath Enhancer

Version 1.02.02 September 5, 2006

Copyright 1989-2006 Novell, Inc.

But the exact details of what it does remain unclear. One thing I do know, it doesn't do the load-balancing trick.

Labels: clustering, netware, novell, storage

Monday, October 20, 2008

Dorm printing

So you have printers that students in the dorms can print to? Wow. Do you audit all those and charge the numbers of pages against the student?

The answer to that is that we make big use of AND Technology's PCounter product. When paired with their PrintStations, it makes a very nice way to put a lid on unrestricted 'free' printing in the dorms. The PrintStations also make sure that only jobs people want to pick up get printed, which saves a serious amount of paper.

PCounter is core to our student printing. We'll only move our NDPS/iPrint infrastructure over to OES2-linux when PCounter is supported on that platform, not before. We'll keep a 2 node NetWare cluster around just for printing if we have to. Since accounting support is one of the features that's supposed to be in OES2-SP1, it is my hope that PCounter will support OES2-Linux within a year after SP1's release. But I haven't heard any specifics.

Friday, September 19, 2008

Moving storage around

Blackboard needs more space on both the SQL server and the Content server, and as the Content server is clustered it'll require an outage to manage the increase. And it'll be a long outage, as 300GB of weensy files takes a LONG time to copy. The SQL server uses plain old Basic partitions, so I don't think we can expand that partition, so we may have to do another full LUN copy which will require an outage. That has yet to be scheduled, but needs to happen before we get through much of the quarter.

Over on the EVA4400 side, I'm evacuating data off of the MSA1500cs onto the 4400. Once I'm done with that, I'm going to be:

- Rebuilding all of the Disk Arrays.

- Creating LUNs expressly for Backup-to-Disk functionality.

- Flashing the Active/Active firmware on to it, the 7.00 firmware rev.

- Getting the two Backup servers installed with the right MPIO widgetry to take advantage of active/active on the MSA.

What has yet to be fully determined is exactly how we're going to use the 4400 in this scheme. I expect to get between 15-20TB of space out of the MSA once I'm done with it, and we have around 20TB on the 4400 for backup. Which is why I'd really like that 40TB license please.

Going Active/Active should do really good things for how fast the MSA can throw data at disk. As I've proven before the MSA is significantly CPU bound for I/O to parity LUNs (Raid5 and Raid6), so having another CPU in the loop should increase write throughput significantly. We couldn't do Active/Active before since you can only do Active/Active in a homogeneous OS environment, and we had Windows and NetWare pointed at the MSA (plus one non-production Linux box).
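For a feel of why parity LUNs chew controller CPU, here is the basic RAID5-style parity calculation as a sketch. This is the textbook XOR scheme, not anything taken from the MSA firmware, and RAID6 adds a second, more expensive calculation that isn't shown here.

```python
def xor_blocks(blocks):
    """XOR equal-sized blocks together, byte by byte."""
    result = bytearray(len(blocks[0]))
    for block in blocks:
        if len(block) != len(result):
            raise ValueError("all blocks in a stripe must be the same size")
        for i, byte in enumerate(block):
            result[i] ^= byte
    return bytes(result)


if __name__ == "__main__":
    # Three data blocks in one stripe (tiny, made-up contents).
    stripe = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xff\x00\xff\x00"]
    parity = xor_blocks(stripe)

    # Lose the middle block, then rebuild it from the survivors plus parity.
    rebuilt = xor_blocks([stripe[0], stripe[2], parity])
    print(rebuilt == stripe[1])
```

Every write to a parity LUN means touching every byte of the affected blocks like this, so a second controller working in parallel plausibly helps.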

In the mean time, I watch progress bars. TB of data takes a long time to copy if you're not doing it at the block level. Which I can't.

Labels: backup, clustering, msa, storage, sysadmin

Sunday, September 14, 2008

EVA6100 upgrade a success

There was only one major hitch for the night, which meant I got to bed around 6am Saturday morning instead of 4am.

For EVA, and probably all storage systems, you present hosts to them and selectively present LUNs to those hosts. These host-settings need to have an OS configured for them, since each operating system has its own quirks for how it likes to see its storage. While the EVA6100 has a setting for 'vmware', the EVA3000 did not. Therefore, we had to use a 'custom' OS setting and a 16 digit hex string we copied off of some HP knowledge-base article. When we migrated to the EVA6100 it kept these custom settings.

Which, it would seem, don't work for the EVA6100. It caused ESX to whine in such a way that no VMs would load. It got very worrying for a while there, but thanks to an article on vmware's support site and some intuition we got it all back without data loss. I'll probably post what happened and what we did to fix it in another blog post.

The only service that didn't come up right was secure IMAP for Exchange. I don't know why it decided to not load. My only theory is that our startup sequence wasn't right. Rebooting the HubCA servers got it back.

Labels: clustering, exchange, hp, storage, sysadmin

Wednesday, September 10, 2008

That darned budget

It has been a common complaint amongst my co-workers that WWU wants enterprise level service for a SOHO budget. Especially for the Win/Novell environments. Our Solaris stuff is tied in closely to our ERP product, SCT Banner, and that gets big budget every 5 years to replace. We really need the same kind of thing for the Win/Novell side of the house, such as this disk-array replacement project we're doing right now.

The new EVAs are being paid for by Student Tech Fee, and not out of a general budget request. This is not how these devices should be funded, since the scope of this array is much wider than just student-related features. Unfortunately, STF is the only way we could get them funded, and we desperately need the new arrays. Without the new arrays, student service would be significantly impacted over the next fiscal year.

The problem is that the EVA3000 contains between 40-45% directly student-related storage. The other 55-60% is Fac/Staff storage. And yet, the EVA3000 was paid for by STF funds in 2003. Huh.

The summer of 2007 saw a Banner Upgrade Project, when the servers that support SCT Banner were upgraded. This was a quarter million dollar project and it happens every 5 years. They also got a disk-array upgrade to a pair of StorageTek (SUN, remember) arrays, DR replicated between our building and the DR site in Bond Hall. I believe they're using Solaris-level replication rather than Array-level replication.

The disk-array upgrade we're doing now got through the President's office just before the boom went down on big expensive purchases. It languished in the Purchasing department due to summer-vacation related under-staffing. I hate to think how late it would have gone had it been subjected to the added paperwork we now have to go through for any purchase over $1000. Under no circumstances could we have done it before Fall quarter. Which would have been bad, since we were too short to deal with the expected growth of storage for Fall quarter.

Now that we're going deep into the land of VMWare ESX, centralized storage-arrays are line of business. Without the STF funded arrays, we'd be stuck with "Departmental" and "Entry-level" arrays such as the much maligned MSA1500, or building our own iSCSI SAN from component parts (a DL385, with 2x 4-channel SmartArray controller cards, 8x MSA70 drive enclosures, running NetWare or Linux as an iSCSI target, with bonded GigE ports for throughput). Which would blow chunks. As it is, we're still stuck using SATA drives for certain 'online' uses, such as a pair of volumes on our NetWare cluster that are low usage but big consumers of space. Such systems are not designed for the workloads we'd have to subject them to, and are very poor performers when doing things like LUN expansions.

The EVA is exactly what we need to do what we're already doing for high-availability computing, yet is always treated as an exceptional budget request when it comes time to do anything big with it. Since these things are hella expensive, the budgetary powers-that-be balk at approving them and like to defer them for a year or two. We asked for a replacement EVA in time for last year's academic year, but the general-budget request got denied. For this year we went, IIRC, both with general-fund and STF proposals. The general fund got denied, but STF approved it. This needs to change.

By October, every person between me and Governor Gregoire will be new. My boss is retiring in October. My grandboss was replaced last year, my great grand boss also has been replaced in the last year, and the University President stepped down on September 1st. Perhaps the new people will have a broader perspective on things and might permit the budget priorities to be realigned to the point that our disk-arrays are classified as the critical line-of-business investments they are.

Labels: budget, clustering, exchange, hp, linux, msa, netware, novell, OES, opinion, storage, sysadmin, virtualization

Wednesday, August 27, 2008

Woot!

There was some fear that the gear wouldn't get here in time for the 9/5 window. The 9/12 window has one of the key, key people needed to handle the migration in Las Vegas for VMWorld, and he won't be back until 9/21 which also screws with the 9/19 window. The 9/19 window is our last choice, since that weekend is move-in weekend and the outage will be vastly more noticeable with students around. Being able to make the 9/5 window is great! We need these so badly that if we didn't get the gear in time, we'd have probably done it 9/12 even without said key player.

The one hitch is if HP can't do 9/5-6 for some reason. Fret. Fret.

Labels: clustering, storage, sysadmin, virtualization

Monday, August 25, 2008

Dynamic partitions in Server 2008 and Cluster

Labels: clustering, microsoft, opinion, storage, sysadmin

Tuesday, March 25, 2008

IPv6 vs IPX

How many of you in the room have been at this long enough to do IPX? Ok, great. Now how many of you have done anything with IPv6? Doesn't that look JUST like IPX?

And he's right, to a point. IPX addresses are of the form network-number:node-number, such as:

00008021:0002a540d0e1

Where 'node number' is the MAC address of the network card in question. It's up to the routers to figure out where network-numbers live, and advertised services issue full-network broadcasts to advertise said service, which is the primary reason that IPX just doesn't scale if WAN links are in the mix. But that's by the by.
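Pulling that example apart in code shows how little there is to an IPX address. This is just a sketch; the function name `parse_ipx` is mine.

```python
def parse_ipx(address: str):
    """Split 'network:node' into the network number and a colon-separated MAC."""
    network, node = address.split(":")
    if len(network) != 8 or len(node) != 12:
        raise ValueError("expected 8 hex digits, a colon, then 12 hex digits")
    int(network, 16)  # raises ValueError if either part is not valid hex
    int(node, 16)
    mac = ":".join(node[i:i + 2] for i in range(0, 12, 2))
    return network, mac


if __name__ == "__main__":
    print(parse_ipx("00008021:0002a540d0e1"))
```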

IPv6 addresses work similarly:

2001:0db8:85a3:08d3:1319:8a2e:0370:7334

The last 64 bits are the interface identifier, which can be derived from the 48-bit MAC address, and the bits ahead of it constitute the network number. Except... the IPv6 designers knew about the failings of IPX and worked around them. The interface identifier doesn't have to be derived from the MAC address, though as I understand it that address has to exist for each physical interface. Unlike IPX, IPv6 has the ability to have 'secondary' addresses. The lack of this ability was the main reason that Novell Cluster Services only worked on IP networks, which caused its own wave of grief when clustering was introduced in the NetWare 5.1 era. Secondary IPv6 numbers don't have to follow the MAC format, which in my opinion is a good thing!
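For the curious, the usual way a MAC ends up inside an IPv6 address is the modified EUI-64 scheme used by stateless autoconfiguration: the 48-bit MAC is stretched to a 64-bit interface ID by inserting ff:fe in the middle and flipping the universal/local bit. Here is a sketch using the MAC from the IPX example and a /64 prefix borrowed from the IPv6 example above; the function names are mine.

```python
import ipaddress


def eui64_interface_id(mac: str) -> bytes:
    """Turn a 48-bit MAC into a 64-bit modified EUI-64 interface identifier."""
    octets = bytearray(int(part, 16) for part in mac.split(":"))
    if len(octets) != 6:
        raise ValueError("expected a MAC like 00:02:a5:40:d0:e1")
    octets[0] ^= 0x02  # flip the universal/local bit
    return bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])


def slaac_address(prefix: str, mac: str) -> ipaddress.IPv6Address:
    """Combine a /64 prefix with the MAC-derived interface identifier."""
    network = ipaddress.IPv6Network(prefix)
    if network.prefixlen != 64:
        raise ValueError("this sketch only handles /64 prefixes")
    interface_id = int.from_bytes(eui64_interface_id(mac), "big")
    return network[interface_id]


if __name__ == "__main__":
    print(slaac_address("2001:db8:85a3:8d3::/64", "00:02:a5:40:d0:e1"))
```

Swap the prefix for an IPX network number and you can see where the BrainShare presenter's deja vu came from.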

Yes, when I first read about IPv6 addressing I had that same, "wow, this is just like IPX," moment the BrainShare presenter had. Only, more scalable, and more flexible.

Labels: brainshare, clustering, netware, novell, sysadmin

Thursday, March 20, 2008

BrainShare Thursday

- Novell Open Enterprise Server 2 Interoperability with Windows and AD. All about Domain Services for Windows and Samba. Neither of which we'll ever use. No idea why I wanted to be in this session.

- Rapid Deployment of ZENworks Configuration Management. Other people around here have suggested that if we haven't moved yet, wait until at least SP3 before moving. If then. So, demotivated. Plus I was rather tired.

- Configuring Samba on OES2. CIFS will do what we need, I don't need Samba. Don't need this one. Skipped.

BASH tips and tricks. I got a lot out of it, but the developers around me were quietly derisive.

ZEN Overview and Features

Not so much with the futures, but it did explain Novell's overall ZEN strategy. It isn't a coincidence that most of Novell's recent purchases have been for ZEN products.

TUT303: OES2 Clusters, from beginning to extremes

This was great. They had a full demo rig, and they showed quite a bit in it. Including using Novell Cluster Services to migrate Xen VM's around. They STRONGLY recommended using AutoYast to set up your cluster nodes to ensure they are simply identical except for the bits you explicitly want different (hostname, IP). And also something else I've heard before, you want one LUN for each NSS Pool. Really. Plus, the presenters were rather funny. A nice cap for the day.

And tonight, Meet the Experts!

Labels: brainshare, clustering, linux, novell, NSS, OES, storage, zenworks

Monday, March 17, 2008

Today at Brainshare

Breakfast was uninspired. As per usual, the hashbrowns had cooled to a gelid mass before I found everything and got a seat.

The Monday keynotes are always the CxO talks about strategy and where we're going. Today a mess of press releases from Novell give a good idea what the talks were about. Hovsepian was first, of course, and was actually funny. He gave some interesting tidbits of knowledge.

- Novell's group of partners is growing, adding a couple hundred new ones since last year. This shows the Novell 'ecosystem' is strong.

- 8700 new customers last year

- Novell press mentions are now only 5% negative.

- High Capacity Computing

- Policy Engines

- Orchestration

- Convergence

- Mobility

Another thing he mentioned several times in association with Fossa and agility, is mergers and acquisitions. This is not something us Higher Ed types ever have to deal with, but it is an area in .COM land that requires a certain amount of IT agility to accommodate successfully. He mentioned this several times, which suggests that this strategy is aimed squarely at for-profit industry.

Also, SAP has apparently selected SLES as their primary platform for the SMB products.

Pat Hume from SAP also spoke. But as we're on Banner, and it'll take a sub-megaton nuclear strike to get us off of it, I didn't pay attention and used the time to send some emails.

Oh, and Honeywell? They're here because they have hardware that works with IDM. That way the same ID you use for your desktop login can be tied to the RFID card in your pocket that gets you into the datacenter. Spiffy.

ATT375 Advanced Tips & Tricks for Troubleshooting eDir 8.8

A nice session. Hard to summarize. That said, they needed more time as the Laptops with VMWare weren't fast enough for us to get through many of the exercises. They also showed us some nifty iMonitor tricks. And where the high-yield shoot-your-foot-off weapons are kept.

BUS202 Migrating a NetWare Cluster to OES2

Not a good session. The presenter had a short slide deck, and didn't really present anything new to me other than areas where other people have made major mistakes. And to PLAN on having one of the linux migrations go all lost-data on you. He recommended SAN snapshots. It shortly digressed into "Migrating a NetWare Cluster to Linux HA", which is a different session altogether. So I left.

TUT215 Integrating Macintosh with Novell

A very good session. The CIO of Novell Canada was presenting it, and he is a skilled speaker. Apparently Novell has written a new AFP stack from scratch for OES2 SP1, since NETATALK is comparatively dog slow. And, it seems, the AFP stack is currently outperforming the NCP stack on OES2 SP1. Whoa! Also, the Banzai GroupWise client for Mac is apparently gorgeous. He also spent quite a long time (18 minutes) on the Kanaka client from Condrey Consulting. The guy who wrote that client was in the back of the room and answered some questions.

Labels: brainshare, clustering, netware, novell, OES, sysadmin, virtualization

Thursday, February 14, 2008

OES2-SP1 soon to be in closed beta

"What is in this release of Open Enterprise Server

Novell Open Enterprise Server 2 Support Pack 1 refreshes the SUSE Linux Enterprise Server 10 distribution with SLES10 SP2, fixes defects found since the release of OES2 and also adds in the following functionality:

- Novell engineered CIFS and AFP protocols

- New version of iFolder (3.7)

- Updated iPrint with an accounting API

- 64-bit version of eDirectory

- Enhanced migration tools and migration GUI

- Improved performance of the XEN hypervisor

- Domain Services for Windows

- NetWare 6.5 Support Pack 8

Note that although Domain Services for Windows is part of OES2 SP1, a separate beta program will be run in order to collate DSfW feedback."

Novell engineered CIFS? I soooo want to know what that is. Is it a completely new CIFS stack, or is it Samba with Novell extensions whacked on? I want to know! The other important bit of information:

"The beta test program is currently scheduled to begin mid March and run through October."

Which means there won't be product for my 2008 upgrade window. Fie. Well, at least we'll have ample time to prototype and test for the 2009 upgrade window.

Update 9/2008: Novell has posted on their beta site that a public beta is 'coming soon'.

Update 10/2008: The public beta for OES2 SP1 has been posted.

Labels: clustering, netware, novell, OES

Wednesday, January 16, 2008

NetWare library patches

This just caused me a problem. It turns out that if you have libcsp6b (the LibC patch) applied and not nwlib6k (the CLib patch), there is an abend possibility. It happened yesterday. It turns out that in that case, a badly formed network broadcast can cause an abend. This caused three of my six cluster nodes to fall on their butts at the same time. That was fun. Strange (but good) thing is, I had already applied both patches to these three servers but hadn't gotten around to rebooting them yet. So, by killing themselves they actually fixed the problem.

The abend, key details:

EIP in SERVER.NLM at code start +0015FD27h

Heh heh heh. Oops.

And now a bit of history. Long time NetWare admins can ignore this part.

Q: Why are there two C libraries?

CLIB is the library NetWare started with. It began life in the dark and misty past, probably in the late 1980's. It is the deepest, darkest bowels of NetWare from the era when Novell was it when it came to office networking. Being so old, its APIs are very mature. Applications developed against CLIB generally speaking just plain work.

CLIB is also deprecated since it is highly proprietary, and doesn't play well with others. "Just plain works" in this instance means an assumption of 8.3 names, with kludging to support long file names if at all possible. CLIB applications have a tendency to have IPX dependencies for no good reason.

LIBC was created, IIRC, around the release of NetWare 5.0 when it became possible for NetWare to operate in a "pure IP" environment. LIBC was designed with the concept of POSIX semantics in mind, which CLIB was not. LIBC was created from scratch with long file name support. By now, as of NetWare 6.5 SP7, most of the NetWare kernel is written against LIBC rather than CLIB.

As an example of LIBC vs CLIB, take the 'MyWeb' service this blog is served by. When I did this the first time, it was on NetWare 6.0, using Apache 1.3. Apache 1.3 was linked against CLIB and was very stable. The service notes for the Apache Modules I needed to run to make it work made it clear that supporting long file-names on remote servers was something that only recently started working.

When the migration to NetWare 6.5 came around, it meant I had to migrate MyWeb to Apache 2.0. Apache 2.0 is linked against LIBC and used a different Apache module to make things work. I had troubles. The LIBC functions were not nearly as mature as their CLIB counterparts, and it showed. 3.5 years later things are a lot more stable than they were back then.

Labels: clustering, netware, novell, sysadmin

Friday, November 30, 2007

OES2 SP1 timing

"Domain Services for Windows, which is scheduled to ship with OES 2 SP1 (currently scheduled for late 2008), will also offer some clear advantages."

"Late 2008" means they WILL NOT have SP1 out by August of 2008. This means that the upgrade of our 6 node cluster to OES will have to wait until 2009. Grrarrr!

Another 21 months of a 32-bit operating system on the single biggest storage consumer on campus. We'll have at least one hardware refresh before then for some of the nodes, and... boy I hope they have NetWare drivers for that. The very limited testing I did with NetWare-in-Xen was not encouraging from a performance stand-point. If it looks like I'll have to deploy that way for the next servers we get in the cluster, I'll have to do more real testing to characterize the performance hit (if any). The idea of a 64-bit memory space for file-caching makes me drool. Not getting it for 21 months is painful.

That said, if Novell releases the eDirectory enabled AFP server for OES2-Linux outside of the service-pack I could still make the 2008 window. That's our only dependency for SP1.

Update (09/08/08): Looks like 'late October' is the date for SP1's release. Should be in public beta before then.

Update (12/03/08): It's out!

Wednesday, November 28, 2007

I/O starvation on NetWare, HP update

This morning I got a voice-mail from HP, an update for our case. Greatly summarized:

The MSA team has determined that your device is working perfectly, and can find no defects. They've referred the case to the NetWare software team.

Or...

Working as designed. Fix your software. Talk to Novell.

Which I'm doing. Now to see if I can light a fire on the back-channels, or if we've just made HP admit that these sorts of command latencies are part of the design and need to be engineered around in software. Highly frustrating.

Especially since I don't think I've made back-line on the Novell case yet. They're involved, but I haven't been referred to a new support engineer yet.

Labels: clustering, hp, msa, netware, novell, OES, storage, sysadmin

Wednesday, November 21, 2007

I/O starvation on NetWare

This is a problem with our cluster nodes. Our cluster nodes can see LUNs on both the MSA1500cs and the EVA3000. The EVA is where the cluster has been housed since it started, and the MSA has taken up two low-I/O-volume volumes to make space on the EVA.

IF the MSA is in the high Avg Command Latency state, and

IF a cluster node is doing a large Write to the MSA (such as a DVD ISO image, or B2D operation),

THEN "Concurrent Disk Requests" in Monitor go north of 1000

This is a dangerous state. If this particular cluster node is housing some higher trafficked volumes, such as FacShare:, the laggy I/O is competing with regular (fast) I/O to the EVA. If this sort of mostly-Read I/O is concurrent with the above heavy Write situation it can cause the cluster node to not write to the Cluster Partition on time and trigger a poison-pill from the Split Brain Detector. In short, the storage heart-beat to the EVA (where the Cluster Partition lives) gets starved out in the face of all the writes to the laggy MSA.

Users definitely noticed when the cluster node was in such a heavy usage state. Writes and Reads took a loooong time on the LUNs hosted on the fast EVA. Our help-desk recorded several "unable to map drive" calls when the nodes were in that state, simply because a drive-mapping involves I/O and the server was too busy to do it in the scant seconds it normally does.

This is sub-optimal. This also doesn't seem to happen on Windows, but I'm not sure of that.

This is something that a very new feature in the Linux kernel could help out with, and that's the concept of 'priority I/O' in the storage stack. I/O with a high priority, such as cluster heart-beats, gets serviced faster than I/O of regular priority. That could prevent SBD abends. Unfortunately, as the NetWare kernel is no longer under development and just under Maintenance, this is not likely to be ported to NetWare.

I/O starvation. This shouldn't happen, but neither should 270ms I/O command latencies.
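The 'priority I/O' idea can be sketched as a toy queue. This is only an illustration of the concept, not how the Linux kernel or NetWare actually schedules I/O; the class name, the two priority levels, and the request labels are all made up for the example.

```python
import heapq
from itertools import count

# Hypothetical priority levels: a lower number is serviced first.
HEARTBEAT, REGULAR = 0, 1


class PriorityIOQueue:
    """Toy model of a storage queue that services high-priority I/O first."""

    def __init__(self):
        self._heap = []
        self._seq = count()  # tie-breaker keeps FIFO order within a priority

    def submit(self, priority, request):
        heapq.heappush(self._heap, (priority, next(self._seq), request))

    def service_next(self):
        _, _, request = heapq.heappop(self._heap)
        return request

    def __len__(self):
        return len(self._heap)


queue = PriorityIOQueue()

# A backlog like the "north of 1000" concurrent disk requests described above.
for i in range(1000):
    queue.submit(REGULAR, f"bulk-write-{i}")

# The heart-beat arrives last but carries the higher priority.
queue.submit(HEARTBEAT, "sbd-heartbeat")

first = queue.service_next()
second = queue.service_next()
print(first, second)
```

In a plain FIFO queue the heart-beat would wait behind all 1000 bulk writes, which is the starvation described above; here it is serviced first.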

Labels: clustering, hp, msa, netware, novell, OES, storage, sysadmin

Tuesday, September 18, 2007

OES2: clustering

Another thing about speeds, now that I have some data to play with. I copied a bunch of user directory data over to the shared LUN. It's a piddly 10GB LUN so it filled quick. That's OK, it should give me some ideas of transfer times. Doing a TSATEST backup from one cluster-node to the other (i.e. inside the Xen bridge) gave me speeds on the order of 1000MB/Min. Doing a TSATEST backup from a server in our production tree to the cluster node (i.e. over the LAN) gave me speeds of about 350MB/Min. Not so good.

For comparison, doing a TSATEST backup from the same host only drawing data from one of the USER volumes on the EVA (highly fragmented, but much faster, storage) gives a rate of 550 MB/Min.
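To compare these with figures quoted in MB/s, the rates above convert as follows. This is plain arithmetic on the numbers in this post; the labels are just shorthand for the three test runs.

```python
# TSATEST rates quoted above, in MB/min.
RATES_MB_PER_MIN = {
    "node to node, inside the Xen bridge": 1000,
    "production server to cluster node, over the LAN": 350,
    "USER volume on the EVA": 550,
}


def mb_per_sec(mb_per_min):
    """Convert a rate in MB/min to MB/s."""
    return mb_per_min / 60.0


for label, rate in RATES_MB_PER_MIN.items():
    print(f"{label}: {rate} MB/min = {mb_per_sec(rate):.1f} MB/s")
```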

I also discovered the VAST DIFFERENCES between our production eDirectory tree, which has been in existence since 1995 if the creation timestamp on the tree object is to be believed, and the brand new eDir 8.8 tree the OES2 cluster is living in. We have a heckova lot more attributes and classes in the prod tree than in this new one. Whoa. It made for some interesting challenges when importing users into it.

Labels: clustering, netware, novell, OES, virtualization

OES2-beta progress

I haven't gotten very far in my testing, but a few things are showing. I managed to do a TSATEST-based throughput run of a backup of SYS. That's about a gig of data. Throughput for just one stream to one of the servers was around 500 MB/min, which is passable and within the realm of real performance for slower hardware. The downside of that is that the CPU reported by "xm top" was around 45%, where the CPU reported in MONITOR was closer to 25%. That's way higher than I expected, but could be related to all the disk I/O ops. This I/O was to a file in the file-system, not a physical device like a LUN on the SAN (that comes later).

Now I'm trying to get Novell Cluster Services installed. I want to get a weensy 2-node cluster set up to prove that it can be done. I suspect it can, but actually seeing it will be very nice.

Labels: clustering, netware, novell, OES

Monday, July 09, 2007

More fun OES2 tricks

Let's say you want to create a cluster mirror of a 2-node cluster for disaster recovery purposes. This will need at least four servers to set up. You have shared storage for both cluster pairs. So far so good.

Create the four servers as OES2-Linux servers. Set up the shared storage as needed so everything can see what it should in each site. Use DRBD to create new block-devices that'll be mirrored between the cluster pairs. Then set up NetWare-in-VM on each server, using the DRBD block-devices as the Cluster disk devices. You could even do SYS: on the DRBD block-devices if you want a true cluster-clone. That way when disk I/O happens on the clustered resources it gets replicated asynchronously to the DR site. Unlike software RAID1, which only considers writes committed when all mirrored LUNs report the commit, here the I/O is considered committed when it hits local storage.

Then, if the primary site ever dies, you can bring up an exact replica of the primary cluster, only on the secondary cluster pair. Key details like how to get the same network in both locations I leave as an exercise for the Cisco engineers. But still, an interesting idea.
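The difference in commit semantics described above can be shown with a toy model. This is a sketch only: the function name and the latency figures are hypothetical, picked to illustrate the point rather than measured on any real hardware.

```python
def ack_latency_ms(local_ms, remote_ms, synchronous):
    """Time until the writer is told its write is committed.

    synchronous=True models the RAID1-style behaviour: wait for every mirror.
    synchronous=False models asynchronous replication: acknowledge as soon as
    local storage has the data, and let the remote copy catch up afterwards.
    """
    if synchronous:
        return max(local_ms, remote_ms)
    return local_ms


# Hypothetical figures, for illustration only.
LOCAL_MS = 5.0    # write latency to the local shared storage
REMOTE_MS = 40.0  # write latency to the DR site, including the network hop

print("synchronous mirror:", ack_latency_ms(LOCAL_MS, REMOTE_MS, True), "ms")
print("asynchronous mirror:", ack_latency_ms(LOCAL_MS, REMOTE_MS, False), "ms")
```

The trade-off is that with asynchronous replication the DR copy can lag behind by whatever writes were still in flight when the primary site died.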

Labels: clustering, netware, novell, OES, virtualization

Thursday, March 22, 2007

TUT 202: NetWare cluster migrations to Linux clusters

- On Linux, cluster nodes are added through YaST

- 120 bytes of meta-data per file on NSS

- iPrint volumes could go on non-NSS volumes

- ext3 on OES2 is indexed, not indexed on OES1. Problem for larger directories.

- Novell Server Consolidation and Migration Tool can migrate NetWare to Linux

- While running in mixed mode, can not extend or create NSS pools. Reboots all around to make this take.

- In mixed mode, trustee modifications do NOT transfer to the other OS. Migrate your NetWare volumes to OES-Linux, and leave them there!

- In OES-Linux, trustees are kept in a file, not in the file-system.

- In mixed mode, cluster load/unload scripts are kept in /etc/opt/novell/ncs/

- When out of mixed mode, scripts are promoted into edir

- Cluster licenses are not checked in OES-Linux, but still 'count' come audit time. So have them.

- The 'cluster convert' command ends mixed-mode operation

- Clustering inside VMWare ESX server: only 2-node Microsoft clusters are supported. All others are not.

Labels: brainshare, clustering, netware, novell, OES

Thursday, September 21, 2006

The pro/con of clustering

"On the topic of clusters, do you find the benefits of a cluster/SAN setup outweighed by the increased complication in node upgrades/patching and the "all your eggs in one basket" when it comes to storage on the SAN?"

One of the biggest things to get used to with clustering is that your uptimes for your cluster nodes will go down dramatically from what you're used to with your existing mainline servers, but your service uptimes will go up. Once we put in the cluster we haven't had an unplanned multi-hour outage that wasn't attributable to network issues. The key here is 'unplanned'. We've had several planned outages for both service-packing and actual hardware upgrades to the SAN array itself.

Prior to the cluster WWU put in three 'facstaff' servers and three 'student' servers to handle user directories and shared directories. This way when one server died, only a third of that class of user was out of luck. The cluster still follows this design for the user directories, but that's more for load-balancing between the cluster nodes than disaster resilience. Since the cluster went in we've merged all of our facstaff shared volumes into a single volume. This was done because we were getting more and more cases of departments needing access to both Share1 and Share3, and we didn't have drive letters for that.

Patching and service-packing the cluster is easier than it would be with stand-alone servers. I can script things so that three of our six cluster nodes vacate themselves from the cluster in the middle of the night, so I can apply service-packs to them in the middle of the day. Repeat the same trick the next day. I can have a service-pack rolled out to the cluster in 48 hours with no after hours work on my part. THAT is a savings (unless you're counting on the overtime pay, which I don't get anyway).

The downside is the 'eggs in one basket' problem. If this building sinks into the Earth right now, WWU is screwed. Recovering from tape, after we get replacement hardware of course, would take close to a week. Don't think we haven't noticed this problem.

To be fair, though, we'd have this problem even if we were still on separate servers. True disaster recovery requires data and services to live in more than one location, and stand-alone servers don't give you that either. Under the old architecture and presuming those servers were split between campus and our building, the 'building sinking into the ground' scenario would cause a significant portion of campus to stop working and a significant portion of students to lose everything for the days it'd take us to recover from tape. During that time WWU's teaching function would probably halt as, 'the Earth ate my homework,' would be a very valid excuse.

In our case losing a third or two thirds of all user-directory and shared-directory data would halt the business of the university. While the outage wouldn't be quite as severe as it would be if our SAN melted, it would be just as disruptive. Because of that, going for an 'all or nothing' solution that increases perceived uptime was very much in order.

We're in the process of trying to replicate our SAN data to a backup datacenter on campus. We can't afford Novell's Business Continuity Cluster which would provide automation to make this exact thing work. So we're having to make do on our own. We don't yet have a firm plan on how to make it work, and the 'fail back' plan is just as shaky; we only got the hardware for the backup SAN a month ago. It will happen, we just don't know what the final solution will look like.

As for iSCSI versus FibreChannel, my personal bias is for FC. However, I fully realize that gigabit ethernet is w-a-y cheaper than any FC solution out there today. I prefer FC because the bandwidth is higher and due to how it is designed I/O contention on the wire has less impact to overall performance. Just remember that iSCSI really really likes jumbo frames (MTU >1500 bytes), and not all router techs are OK with twiddling that; you may end up with a parallel and separate ethernet setup between your servers and the iSCSI storage.

As for iSCSI throughput, I haven't done tests on that. However I just got done looking at a whole bunch of throughput tests in and out of our FC SAN. During the IOZONE tests on NetWare, I recorded a high-water mark of 101 MB/s out of the EVA. This is 80% GigE speed, and therefore theoretically this transfer rate was doable over iSCSI. The true high-water mark was achieved by running IOZONE on the Linux server locally on the server, and on a cluster node running TSATEST on a locally mounted volume. At that time I saw a maximum transfer rate of 146 MB/s, which is 117% of GigE speed, so iSCSI wouldn't have been able to handle that. On the other hand, during day to day operations and during backups the transfer rate has never exceeded the 125 MB/s GigE mark. It's come close, but not exceeded it.
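The percentages above come from comparing each measured rate against the raw gigabit line rate of 125 MB/s. A quick check of the arithmetic follows; it ignores Ethernet, TCP and iSCSI protocol overhead, so the real usable ceiling would be somewhat lower than the raw figure assumed here.

```python
# Raw gigabit Ethernet line rate: 1,000,000,000 bits/s divided by 8, in MB/s.
GIGE_MB_PER_S = 1_000_000_000 / 8 / 1_000_000  # 125.0


def percent_of_gige(mb_per_s):
    """Express a transfer rate as a percentage of the raw GigE line rate."""
    return 100.0 * mb_per_s / GIGE_MB_PER_S


# The two high-water marks quoted above.
MEASURED_MB_PER_S = {
    "IOZONE on NetWare": 101,
    "local IOZONE plus TSATEST peak": 146,
}

for label, rate in MEASURED_MB_PER_S.items():
    verdict = "fits within" if rate <= GIGE_MB_PER_S else "exceeds"
    print(f"{label}: {rate} MB/s is {percent_of_gige(rate):.0f}% of GigE, "
          f"which {verdict} a single gigabit link")
```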

Labels: clustering