Monday, December 07, 2009

Account lockout policies

Since NDS was first released back at the dawn of the commercial internet (a.k.a. 1993) Novell's account lockout policies (known as Intruder Lockout) were set-able based on where the user's account existed in the tree. This was done per Organizational-Unit or Organization. In this way, users in .finance.users.tree could have a different policy than .facilities.users.tree. This was the case in 1993, and it is still the case in 2009.

Microsoft only got a hierarchical tree with Active Directory in 2000, and they didn't get around to making account lockout policies granular. For the most part, there is a single lockout policy for the entire domain with no exceptions. 'Administrator' is subjected to the same lockout as 'Joe User'. With Server 2008 Microsoft finally got some kind of granular policy capability in the form of "Fine Grained Password and Lockout Policies."

This is where our problem starts. You see, with the Novell system we'd set our account lockout policies to lock after 6 bad passwords in 30 minutes for most users. We kept our utility accounts in a spot where they weren't allowed to lock, but gave them really complex passwords to compensate (as they were all used programatically in some form, this was easy to do). That way the account used by our single-signon process couldn't get locked out and crash the SSO system. This worked well for us.

Then the decision was made to move to a true blue solution and we started to migrate policies to the AD side where possible. We set the lockout policy for everyone. And we started getting certain key utility accounts locked out on a regular basis. We then revised the GPOs driving the lockout policy, removing them from the Default Domain Policy, creating a new "ILO polcy" that we applied individually to each user container. This solved the lockout problem!

Since all three of us went to class for this 7-9 years ago, we'd forgotten that AD lockout policies are monolithic and only work when specified in Default Domain Policy. They do NOT work per-user the way they are in eDirectory. By doing it the way we did, no lockout policies were being applied anywhere. Googling on this gave me the page for the new Server 2008-era granular policies. Unfortunately for us, it requires the domain to be brought to the 2008 Functional Level, which we can't do quite yet.

What's interesting is a certain Microsoft document that suggested settings of 50 bad logins every 30 minutes as a way to avoid DoSing your needed accounts. That's way more that 6 every 30.

Getting the forest functional level raised just got more priority.

Labels: edir, microsoft, netware, novell, passwords, security, sysadmin

Wednesday, September 30, 2009

I have a degree in this stuff

- A career in academics

- A career in programming

Anyway. Every so often I stumble across something that causes me to go Ooo! ooo! over the sheer computer science of it. Yesterday I stumbled across Barrelfish, and this paper. If I weren't sick today I'd have finished it, but even as far as I've gotten into it I can see the implications of what they're trying to do.

The core concept behind the Barrelfish operating system is to assume that each computing core does not share memory and has access to some kind of message passing architecture. This has the side effect of having each computing core running its own kernel, which is why they're calling Barrelfish a 'multikernel operating system'. In essence, they're treating the insides of your computer like the distributed network that it is, and using already existing distributed computing methods to improve it. The type of multi-core we're doing now, SMP, ccNUMA, uses shared memory techniques rather than message passing, and it seems that this doesn't scale as far as message passing does once core counts go higher.

They go into a lot more detail in the paper about why this is. A big one is hetergenaity of CPU architectures out there in the marketplace, and they're not just talking just AMD vs Intel vs CUDA, this is also Core vs Core2 vs Nehalem. This heterogenaity in the marketplace makes it very hard for a traditional Operating System to be optimized for a specific platform.

A multikernel OS would use a discrete kernel for each microarcitecture. These kernels would communicate with each other using OS-standardized message passing protocols. On top of these microkernels would be created the abstraction called an Operating System upon which applications would run. Due to the modularity at the base of it, it would take much less effort to provide an optimized microkernel for a new microarcitecture.

The use of message passing is very interesting to me. Back in college, parallel computing was my main focus. I ended up not pursuing that area of study in large part because I was a strictly C student in math, parallel computing was a largely academic endeavor when I graduated, and you needed to be at least a B student in math to hack it in grad school. It still fired my imagination, and there was squee when the Pentium Pro was released and you could do 2 CPU multiprocessing.

In my Databases class, we were tasked with creating a database-like thingy in code and to write a paper on it. It was up to us what we did with it. Having just finished my Parallel Computing class, I decided to investigate distributed databases. So I exercised the PVM extensions we had on our compilers thanks to that class. I then used the six Unix machines I had access to at the time to create a 6-node distributed database. I used statically defined tables and queries since I didn't have time to build a table parser or query processor and needed to get it working so I could do some tests on how optimization of table positioning impacted performance.

Looking back on it 14 years later (eek) I can see some serious faults about my implementation. But then, I've spent the last... 12 years working with a distributed database in the form of Novell's NDS and later eDirectory. At the time I was doing this project, Novell was actively developing the first version of NDS. They had some problems with their implementation too.

My results were decidedly inconclusive. There was a noise factor in my data that I was not able to isolate and managed to drown out what differences there were between my optimized and non-optimized runs (in hindsight I needed larger tables by an order of magnitude or more). My analysis paper was largely an admission of failure. So when I got an A on the project I was confused enough I went to the professor and asked how this was possible. His response?

"Once I realized you got it working at all, that's when you earned the A. At that point the paper didn't matter."Dude. PVM is a message passing architecture, like most distributed systems. So yes, distributed systems are my thing. And they're talking about doing this on the motherboard! How cool is that?

Both Linux and Windows are adopting more message-passing architectures in their internal structures, as they scale better on highly parallel systems. In Linux this involved reducing the use of the Big Kernel Lock in anything possible, as invoking the BKL forces the kernel into single-threaded mode and that's not a good thing with, say, 16 cores. Windows 7 involves similar improvements. As more and more cores sneak into everyday computers, this becomes more of a problem.

An operating system working without the assumption of shared memory is a very different critter. Operating state has to be replicated to each core to facilitate correct functioning, you can't rely on a common memory address to handle this. It seems that the form of this state is key to performance, and is very sensitive to microarchitecture changes. What was good on a P4, may suck a lot on a Phenom II. The use of a per-core kernel allows the optimal structure to be used on each core, with changes replicated rather than shared which improves performance. More importantly, it'll still be performant 5 years after release assuming regular per-core kernel updates.

You'd also be able to use the 1.75GB of GDDR3 on your GeForce 295 as part of the operating system if you really wanted to! And some might.

I'd burble further, but I'm sick so not thinking straight. Definitely food for thought!

Thursday, August 06, 2009

Another nice How-To

Setting up Novell LDAP Libraries for C#

Another one of those things I went, "Ooh! USEFUL! Oh wait, we don't care any more. Drat. I bet I can blog that, though." So I am.

Use VisualStudio for developing applications (we did)? Need to talk to eDirectory? Why not use LDAP and Novell's tools for doing so! We've used their elderly ActiveX controls to do great things, and this should to about half of what we do with those. File manipulations will need to be another library, though.

Update: And how to set it up to use SSL. It requires Mono.

Friday, June 12, 2009

Explaining LDAP.

"How would you explain LDAP to a sysadmin who'd've heard of it, but not interacted with it before."

My first reaction illustrates my own biases rather well. "How could they NOT have heard of it before??" goes the rant in my head. Active Directory, choice of enterprise Windows deployments everywhere, includes both X500 and LDAP. Anyone doing unified authentication on Linux servers is using either LDAP or WinBind, which also uses LDAP. It seems that any PHP application doing authentication probably has an LDAP back end to it1. So it seems somewhat disingenuous to suppose a sysadmin who didn't know what LDAP was and could do.

But then, I remind myself, I've been playing with X500 directories since 1996 so LDAP was just another view on the same problem to me. Almost as easy as breathing. This proposed sysadmin probably has been working in a smaller shop. Perhaps with a bunch of Windows machines in a Workgroup, or a pack of Linux application servers that users don't generally log in to. It IS possible to be in IT and not run into LDAP. This is what makes this particular question an interesting challenge, since I've been doing it long enough that the definition is no longer ready to my head. Unfortunately for the person I'm about to info-dump upon, I get wordy.

LDAP.... Lightweight Directory Access Protocol. It came into existence as a way to standardize TCP access to X500 directories. X500 is a model of directory service that things like Active Directory and Novell eDirectory/NDS implemented. Since X500 was designed in the 1980's it is, perhaps, unwarrantedly complex (think ISDN), and LDAP was a way to simplify some of that complexity. Hence the 'lightweight".Yeah, I get wordy.

LDAP, and X500 before it, are hierarchical in organization, but doesn't have to be. Objects in the database can be organized into a variety of containers, or just one big flat blob of objects. That's part of the flexibility of these systems. Container types vary between directory implementation, and can be an Organizational Unit (OU=), a DNS domain (DC=), or even a Cannonical Name (CN=), if not more. The name of an object is called the Distinguished Name (DN), and is composed of all the containers up to root. An example would be:

CN=Fred,OU=Users,DC=organization,DC=edu

This would be the object called Fred, in the 'Users' Organizational Unit, which is contained in the organization.edu domain.

Each directory has a list of classes and attributes allowable in the directory, and this is called a Schema. Objects have to belong to at least one class, and can belong to many. Belonging to a class grants the object the ability to define specific attributes, some of which are mandatory similar to Primary Keys in database tables.

Fred is a member of the User class, which itself inherits from the Top class. The Top class is the class that all other classes inherit from, as it defines the bare minimum attributes needed to define an object in the database. The User class can then define additional attributes that are distinct to the class, such as "first name", "password", and "groupMembership".

The LDAP protocol additionally defines a syntax for searching the directory for information. The return format is also defined. Lets look at the case of an authentication service such as a web-page or Linux login.

A user types in "fred" at the Login prompt of an SSH login to a linux server. The linux server then asks for a password, which the user provides. The Pam back-end then queries the LDAP server for objects of class User named "fred", and gets one, located at CN=Fred,OU=Users,DC=organization,DC=edu. It then queries the LDAP server for objects of class Group that are named CN=LinuxServerAccess,OU=Servers,DC=Organization,DC=EDU, and pulls the membership attributes. It finds that Fred is in this group, and therefore allowed to log in to that server. It then makes a third connection to the LDAP server and attempts to authenticate as Fred, with the password Fred supplied at the SSH login. Since Fred did not fat finger his password, the LDAP server allows the authenticated login. The Linux server detects the successfull login, and logs out of LDAP, finally permiting Fred to log in by way of SSH.

As I said before, these databases can be organized any which way. Some are organized based on the Organizational Chart of the organization, not with all the users in one big pile like the above example. In that case, Fred's distinguished-name could be as long as:

CN=Fred,ou=stuhelpdesk,ou=DesktopSupport,ou=InfoTechSvcs,ou=AcadAffairs,dc=organization,dc=edu

How to organize the directory is up to the implementers, and is not included in the standards.

The higher performing LDAP systems, such as the systems that can scale to 500,000 objects or higher, tend to index their databases in much the same way that relational databases do. This greatly speeds up common searches. Searching for an object's location is often one of the fastest searches an LDAP system can perform. Because of this LDAP very frequently is the back end for authentication systems.

LDAP is in many ways a speciallized identity database. If done right, on identical hardware it can easilly outperform even relational-databases in returning results.

Any questions?

1: Yes, this is wrong. MySQL contains a lot, if not most, of these PHP-application logins on the greater internet. I said I had my biases, right?

Monday, May 11, 2009

Rebuilds and nwadmin

Happily for me, this is a procedure I can do without having to look things up in the Novell KB. This is part of the reason the letters "CNE" follow my name. The procedure is pretty straight-forward and I've done it before.

- Remove dead server's objects from the tree

- Designate a new server as the Master for any replica this server was the master of (all of them, as it happened)

- Install server fresh

As for the install... this server is an HP BL20P G3, which means I used the procedure I documented a while back (Novell, local copy). A few minor steps changed (the INSERT Linux I used then now correctly handles SmartArray cards), but otherwise that's what I did. Still works.

For a wonder, our SSL administrator still had the custom SSL certificate we created for this server three years ago. That saved me the step of creating a CSR and setting up all the Subject Alternate Names we needed.

And today I fired up NWADMIN for the first time in not nearly long enough to associate the SLP scope to this server, since it was one of our two DA's. I could probably have done the same thing in iManager with "Other" attributes, but... why risk not getting all the right attributes associated when I have a tool that has all the built-in rules. This is the one thing that I still have NWAdmin around for. SLP-on-NetWare management.

Friday, April 03, 2009

Open-sourcing eDirectory?

However... it isn't the RSA technology that allows eDirectory to scale as far as it does. To the best of my knowledge, that's pure Novell IP, based on close to 20 years of distributed directory experience. The RSA stuff is used in the password process, specifically the NDS Password, as well as authenticating eDirectory servers to the tree and each other. The RSA code is a key glue to holding the directory together.

If Novell really wanted to, they could produce another directory that scales as far as eDirectory does. This directory would be fundamentally incompatible with eDir because it would have to be made without any RSA code, which eDirectory requires. This hypothetical open-source directory could scale as far as eDir does, but would have to use a security process that is also open-source.

This would take a lot of engineering on the part of Novell. The RSA stuff has been central to both NDS and eDir for all of those close to 20 years, and the dependency tree is probably very large. The RSA code even is involved in the NCP protocol that eDir uses to talk with other eDir servers, so a new network protocol would probably have to be created from scratch. At the pace Novell is developing software these days, this project would probably take 2-3 years.

Since it would take a lot of developer time, I don't see Novell creating an open-source eDir-clone any time soon. Too much effort for what'll essentially be a good-will move with little revenue generating potential. That's capitalism for you.

Wednesday, December 03, 2008

OES2 SP1 ships!

Monday, November 17, 2008

Signs and portents

Thus emboldened I checked around a few more places. NetWare 6.5 SP8 was in the list, and still is right now. As is Open Enterprise Server 2 SP1. Both have the public betas posted, though.

But 8.8.4 was there. I saw it. Must have been a test or something. All this tells me that OES2 SP1 (a.k.a. NW65SP8) is just around the corner. Since we were told back at BrainShare that Sp1 would be in the Q4 time-frame, it's about due.

Wednesday, October 15, 2008

OES2 SP1 (public beta) has been posted

I believe the NDA has lifted, but I'm not 100% on that. Will check. But, some of the new stuff in SP1:

- An AFP stack that doesn't suck. Or more specifically, an AFP stack that scales beyond 100 users and is eDirectory integrated.

- A new CIFS stack written by Novell, so it can scale well past the Samba limit.

- A migration toolkit in one UI, rather than a cluster of scripts.

- A new version of iFolder

- EDirectory integrated DNS/DHCP. But no eDir integrated SLP yet, open-source politics you know.

- IIRC a beta of eDir 8.8 SP4.

- The ability to put iPrint-for-Linux on NSS volumes (handy for Clustering).

- And lots more I can't remember off the top of my head.

One thing I think it is safe to say, is that even though it says "Beta4" on it, it's really a release-candidate. Only major bugs are getting quashed right now. UI freeze was a month or more ago, and strange, annoying behaviors may get "fixed in doc" rather than getting true fixes which will have to wait for SP2. Still report them anyway, since it'll go on the list to fix in the next SP.

Friday, July 25, 2008

Handling eDirectory core-files on linux

Packaging the Core

First and foremost, you already have the tool to package core files for delivery to Novell already on your system. TID3078409 describes the details of how to use 'novell-getcore.sh'. It is included on 8.7.3.x installations as well as 8.8.x installations.

Running it looks like this:

edirsrv1:~ # novell-getcore -b /var/opt/novell/eDirectory/data/dib/core.31448 /opt/novell/eDirectory/sbin/ndsd

Novell GetCore Utility 1.1.34 [Linux]

Copyright (C) 2007 Novell, Inc. All rights reserved.

[*] User specified binary that generated core: /opt/novell/eDirectory/sbin/ndsd

[*] Processing '/var/opt/novell/eDirectory/data/dib/core.31448' with GDB...

[*] PreProcessing GDB output...

[*] Parsing GDB output...

[*] Core file /var/opt/novell/eDirectory/data/dib/core.31448 is a valid Linux core

[*] Core generated by: /opt/novell/eDirectory/sbin/ndsd

[*] Obtaining names of shared libraries listed in core...

[*] Counting number of shared libraries listed in core...

[*] Total number of shared libraries listed in core: 72

[*] Corefile bundle: core_20080725_092227_linux_ndsd_edirsrv1

[*] Generating GDBINIT commands to open core remotely...

[*] Generating ./opencore.sh...

[*] Gathering package info...

[*] Creating core_20080725_092227_linux_ndsd_edirsrv1.tar...

[*] GZipping ./core_20080725_092227_linux_ndsd_edirsrv1.tar...

[*] Done. Corefile bundle is ./core_20080725_092227_linux_ndsd_edirsrv1.tar.gzOnce you have the packaged core, you can upload it to ftp.novell.com/incoming as part of your service-request.

Including More Data

If you're lucky enough to be able to cause the core file to drop on demand, or it just plain happens often enough that repetition isn't a problem, there is one more thing you can do to include better data in the core you ship to Novell. TID3113982 describes a setting you can add to the ndsd launch script (/etc/init.d/ndsd) that'll include more data. The TID describes what is being done pretty well. In essence, you're using an alternate malloc call that fails with better information than the normal one. You don't want to run with this set for very long, especially in busy environments, as it impacts performance. But if you have a repeatable core, the information it can provide is better than a 'naked' core. Setting MALLOC_CHECK_=2 is my recommendation.

Be sure to unset this once you're done troubleshooting. As I said, it can impact performance of your eDirectory server.

Monday, June 16, 2008

A good article on trustees

Labels: coolsolutions, edir, netware, novell, NSS, storage, sysadmin

Thursday, March 20, 2008

BrainShare Wednesday

That said, the new GroupWise WebAccess is gorgeous. I wish Exchange had their non-ActiveX pages look that good.

TUT175: RBAC: Avoiding the horror, getting past the hype

Mostly about IDM as it turned out. Only minimally interesting from an abstract viewpoint about roles in general.

TUT 277: Advanced eDirectory Configuration, new features, and tuning for performance

I learned a few things I didn't know, such as the fact that each object as an "AncestorList" attribute listing who their parent objects are. This apparently greatly speeds up searching. SP3, coming out this Summer, will have faster LDAP binds for a couple of reasons. Right now Novell is recommending 2 million objects as a reasonable maximum size for a partition for performance reasons.

And also they reiterated something I've heard before...

You know how back in the NetWare 4 days, we said to design your tree by geography at the first level, and then get to departments? Um, sorry about that. It was great back then, but for LDAP or IDM it really, really slows things down.Yep. I took my first class for my CNA when 'Green River' was just coming out, or was just out. So I remember that.

TUT221: iPrint on Linux, what Novell Support wants you to know

A nice session from a mainline support guy about the ways people don't do iPrint on linux correctly. We're not going there until pcounter can run in linux, so this is still somewhat abstract. But, nice to know.

- The reason that some print jobs render differently than direct-print jobs, is because of how Windows is designed. Direct-print jobs render with the 'local print provider', and iPrint jobs render with the 'network print provider'. This is a Microsoft thing, not an iPrint thing. You can duplicate it by setting up a microsoft IPP printer (assuming you're not mandating SSL like we are) and printing to the same printer with the same driver.

- The Manager on Linux doesn't use a Broker, it uses a 'driver store'.

- The Manager on NetWare doesn't always bind to the same broker. I didn't know that.

- It is recommended to have only one Broker, or one driver store per tree.

- Novell recommends using DNS rather than IP for your printer-agents, check your manager load scripts.

Labels: brainshare, edir, linux, microsoft, netware, novell, OES, pcounter, printing

Tuesday, March 18, 2008

BrainShare Tuesday

ATT326: Advanced Linux Troubleshooting

An ATT, therefore hard to summarize. But I learned about a few new commands I didn't know about before. Like strace. And vimdiff.

TUT130: Challenges in Storage I/O in Virtualization

Another nice one, but an emergency at work (printing down in a dorm, during finals week) distracted me heavily during the first half of it. Which resulted in the following note in my notes:

NPIV looks really nifty. Look into it.NPIV being how you can use fibre-channel zoning to zone off VM's, rather than HBA's. Highly useful. I also learned about a neat new thing called Virtual Fabrics. Virtual Fabrics work kind of like VLANS for fabrics. You can segregate your fabrics into fabrics that share hardware but nothing else. Handy if your, say, Solaris admins don't want you mucking about with their zoning, while saving money through consolidated hardware.

TUT216: OES2 SP1 Architectural Overview

There is a LOT of new stuff in SP1.

- It will include eDir 8.8.4 (8.8.3 will ship this summer sometime)

- NCP and eDir will be fully 64-bit

- OES2 SP1 will be based on SLES SP2, which will be releasing about the same time

- AFP Support

- AFP 3.1

- Uses Diffie-Helman 1 for password exchange, meaning the 8-character password problem is solved.

- Fully SMP-safe

- Has cross-protocol locking with NCP. CIFS doesn't have cross-protocol locking yet, but IIRC, Samba does

- Does not need LUM enabled users

- CIFS Support

- NTLMv1, but v2 is a possibility if enough people ask, so file those enhancement requests!!

- CIFS is separate from Samba, therefore can not be used in conjunction with Domain Services for Windows

- As with AFP, fully SMP safe

- EDir 8.8.4

- LDAP auditing enhanced

- "newer auth protocols", but they didn't say what.

TUT211: Enhanced Protocol Support in OES2 SP1

This is the session where they went into detail about the AFP and CIFS support. They said that netatalk, the existing AFP stack on Linux, gets really slow once you go over the 20 concurrent users. Whoa! I can soooo understand why Novell felt the need to make a new one.

- The 8 character password limit has been fixed! They now support DH1 for passing passwords.

- The 'afptcp' daemon can use one password protocol at a time, so you can only use DH1, or one of the other three I can't remember.

- Support for OSX 10.1 and 10.2 is scanty, and 10.5 is limited but users may not notice anyway.

- Passwords will be case sensitive.

- Kerberos will be in a future release

- Performance is faster than NetWare, partly due to the ability to multi-thread

- Can register services by way of SLP

- Only supports NSS for the time being, the other Linux file-systems will be a future feature.

- Can support 500 concurrent users, and 1000+ in the future. This fits our current AFP loads.

- We can configure more about how it works than we could on NetWare, such as how many worker threads to spawn.

- Has meaninful debug logs!

- Has a new command, 'afpstat' that works like 'netstat' for giving a snapshot of afp connections.

Tonight was the night formerly known as 'Sponsor Night,' but has a new name now that everyone who gets a booth is no longer a 'sponsor'. Some are sponsors, some are exhibiters. I can't keep track. Anyway, today was their party. "World of Novellcraft!" Homage to vid-gaming.

Lots of Wii, lots of Rock Band, some Halo, lots of women dressed in Renaissance Festival gear getting their pictures taken by the 90%+ male audience. I've blogged before about my ambivalence about Sponsor Night. I lasted until about 7, when I came back to the hotel.

Tomorrow I have an actual LUNCH BREAK in my schedule! Ooo! And

Labels: brainshare, edir, linux, netware, novell, OES, sysadmin

Monday, February 25, 2008

First OES2

It being done on a Pentium III 1.2GHz machine meant it took a while. But very little in the way of complication. The one hitch was that it changed the certificate the NLDAP server loads to the default, which I didn't catch until a certain service we wrote failed. But that was a very easy fix.

Tuesday, February 12, 2008

OpenID and eDirectory

The answer to that is, "not natively." At its very base, openID is a method of granting foreign security principals access to resources. There will have to be some form of middleware that translates 'joebob.vox.com' into 'ext-1612ba2.extref.org.tree' (or even "joebob.vox_com.extref.org.tree") in eDirectory, but once that translation is in place eDirectory will support openID just fine. Now that openID is getting serious traction this becomes more interesting. But natively? Not really.

That said, eDirectory is very well suited for being the identity store for an openID-enabled database. It scales freakishly far. This is exactly the sort of 'distributed identity' idea that Novell has pointed out at the last few BrainShares. Through this sort of distributed identity system is would be possible for two Universities to grant members in other organizations, with their own eDirectories, access to a web-server based collaboration system.

Labels: brainshare, edir, opinion

Friday, January 25, 2008

A needed patch.

The sorting problem happens when you have eDir 8.8 installed. Suddenly C1 starts sorting things by creation date rather than as you've ever seen it before. This is... confusing. ConsoleOne 1.3h helped some of it for us, but not all. And now, we have a patch!

Let ConsoleOne Sort Correctly!

Thursday, December 27, 2007

eDirectory certificate server changes

With Certificate Server 3.2 and later, in order to completely backup the Certificate Authority, it is necessary to back up the CRL database and the Issued Certificates database. On NetWare, these files are located in the sys:system\certserv directory.

For other platforms, both of these databases are located in the same directory as the eDirectory dib files. The defaults for these locations are as follows:

Windows: c:\novell\nds\dibfiles

Linux/AIX/Solaris: /var/opt/novell/edirectory/data/dib

These defaults can be changed at the time that eDirectory is installed.

The files to back up for the CRL database are crl.db, crl.lck, crl.01 and the crl.rfl directory. The files to back up for the Issue Certificates database are cert.db, cert.lck, cert.01, and the cert.rfl directory.

I didn't know about that directory. I also didn't know that the CA is publishing a certificate-revocation-list to sys:apache2\htdocs\crl\. Time to twiddle the backup jobs.

Thursday, December 20, 2007

eDir 8.8, Priority Sync

6.0 Priority SyncWhich sounds spiffy. Instant sync of passwords? I'm all for that. Then I remembered, 'wasn't that happening already? That's right, that's the "SYNC_IMMEDIATE" flag in schema.' And that's what's described in this older CoolSolutions article.

Priority Sync is a new feature in Novell® eDirectory 8.8™ that is complimentary to the current synchronization process in eDirectory. Through Priority Sync, you can synchronize the modified critical data, such as passwords, immediately.

You can sync your critical data through Priority Sync when you cannot wait for normal synchronization. The Priority Sync process is faster than the normal synchronization process. Priority Sync is supported only between two or more eDirectory 8.8 or later servers hosting the same partition.

6.1 Need for Priority Sync

Normal synchronization can take some time, during which the modified data would not be available on other servers. For example, suppose that in your setup you have different applications talking to the directory. You change your password on Server1. With normal synchronization, it is some time before this change is synchronized with Server2. Therefore, a user would still be able to authenticate to the directory through an application talking to Server2, using the old password.

In large deployments, when the critical data of an object is modified, changes need to be synchronized immediately. The Priority Sync process resolves this issue.

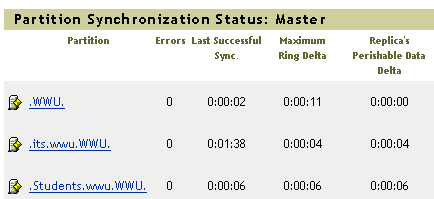

Looking at iMonitor I see this:

As 90-95% of our user objects are in either the root container or the students container, those are the statistics I'm interested in. The "maximum ring delta" number is very, very rarely over 30 seconds for these two partitions. With it being intersession right now, we're seeing some higher numbers than usual right now but it is still kept in close sync. As we have 24 hour computer labs, and a simple login causes several user-object attributes to update, we have a continual flow of directory changes. In our case, using Priority Sync would buy us a few seconds at most. We're not under any sort of regulatory mandate to do things 'instantly' so that isn't an issue, and our password-change process is well known to our end users for taking "up to 5 minutes".

Still, I like the idea even if it isn't a good fit for us.

Wednesday, December 19, 2007

eDir 8.8 is in

Whenever you do upgrades like this you always wonder if those balls you're juggling are tennis-balls or grenades. It took about a half hour per server and didn't have any significant hitches. The one problem that did surface is that the OES1-linux server's LDAP server had its certificate change from the one it was using to SSL CertificateDNS. This was not good, as that certificate doesn't have the subject-name we need and this caused some S/LDAP binds to fail due to SSL validation problems. That was an easy fix. The LDAP servers on the NetWare boxes didn't change.

This was a tennis-ball upgrade. So far.

We haven't turned on case-sensitive LDAP binds yet, but soon. Soon.

One unexpected side-effect of getting all three eDir servers upgraded to 8.8 like this, is that the Change Cache is now cleaned of those permanent residents we've had for ages. Woo!

Monday, December 17, 2007

Not dead.

On my list of things to do during the winter inter-session is to get eDir 8.8 deployed in the production tree. I just need to have ALL the servers in the tree (all, not just replica holders due to backlink updates) up and talking when I do the first one, and that could take some scheduling. This is the first step to OES2, which will be deployed on the eDir servers first.

As soon as I get some new hardware, since they're getting old.

Tuesday, October 23, 2007

Large eDirectory installs

Check it out.

Also? Novell has a real HTTP interface for the forums now.

Tuesday, September 25, 2007

The perils of a manual process

A word of note:

We do not use Novell Identity Management. We've built our own. When Novell first came out with DirXML 1.0, we already had the foundation of what we have right now. So, when I talk about IDM, I'm actually referring to our own self-built system not Novell's IDM.

To troubleshoot, I ran many tests. The longest one was to turn on dstrace logging on the root replica server, and push changes to the object. I'd push a change, watch the logs, then parse through the log for the user's object.

- Changing it via LDAP made a sync.

- Changing it via the IDM did not make a sync.

- Changing it via iManager made a sync.

- Changing it via ConsoleOne on the IDM server made a sync

To figure out what that is, I needed to sniff packets. PKTSCAN to the rescue. On the IDM server I turned off connections to all but the server holding the Master replicas of everything. Then on the master replica server I loaded PKTSCAN. I turned on sniffing, make the change, wait 5 seconds just to be safe, turn off the sniff, save the sniff, and load the sniff in Wireshark. The two cases I tested:

- Change the concurrent connections attribute through IDM

- Change the concurrent connections attribute through ConsoleOne on the IDM server

Looking at the details of T=WWU, I saw that it had an aux class associated with it. It was posixAccount. Thus, was I illuminated.

This particular sysadmin requested to have his account 'turned on for linux'. Which is code for having the posixAccount aux-class associated and the uid, gid, cn, and shell attributes added. This is still a manual process for us since requests are few and far between, though that is changing. It would seem that when I did it, I right-clicked on the wrong object. Whoopsie poo! Easily fixed, though.

I removed the aux-class from the tree root object, and suddenly... IDM changes started applying to the right object! Hooray! I think the IDM code was keying off of commonName rather than CN for some reason, which is why the aux-class got in the way.

Monday, September 24, 2007

Neat eDir trick

So, what the heck is it? From the documentation:

The event system extension allows the client to specify the events for which it wants to receive notification. This information is sent in the extension request. If the extension request specifies valid events, the LDAP server keeps the connection open and uses the intermediate extended response to notify the client when events occur. Any data associated with an event is also sent in the response. If an error occurs when processing the extended request or during the subsequent processing of events, the server sends an extended response to the client containing error information and then terminates the processing of the request.It's an extension to LDAP that Novell created to permit event monitoring. It monitors events in eDirectory, from object changes, to internal eDirectory statuses like obituary processing. For example, you can set up a connection and tell the LDAP server to tell you of all changes to the "member" attribute, and track all group modifications. Or track the "last login time" attribute, and create a robust login audit log.

Stuff like this is downright handy in identity management situations. If a change is made to "phoneNumber" in the Identity tree, that change can be trapped by the monitor, and propagated to the production eDir tree, Active Directory, and NIS+. What's now a batch process can be event based.

I'm not a java programmer so I'm limited in what *I* can do with it. However, I have coworkers who DO speak java, and can probably do wonderful things with it.

Thursday, August 23, 2007

Politics of passwords

When we got our new Vice Provost, we got a person who wasn't familiar with the history of our organization. These sorts of things are always a mixed blessing. In this case, he wanted to get password aging going. The previous incumbent had considered it, but the project was on perma-hold while he worked certain political issues. The new guy managed to make a convincing argument to the University leadership, and the fiat to do password aging came down from the very top. And So It Shall Be. And Is. As with our existing password sync systems, this is a system we built from internal components and uses no Novell IDM stuff at all. It works for us.

Yesterday we got asked to make certain that the Novell password was case-sensitive.

I thought it already was, as Universal Passwords are case sensitive. But testing showed that you could set a mixed-case password on an account, and log in to Novell with the lower-case password. It won't allow workstation login on domained PCs as the AD password is mixed-case. In the case of students who only ever login using web-services, they sometimes got a shock when using a lab for the first time and the password they'd been using for months didn't work.

There are two things working against us here.

- We did NOT set the "NMAS Authentication = On" setting in the Client we push. This means that while we are setting a universal password, none of our Novell Clients have been told to use them.

- LDAP logins to edir 8.7.3 use the NDS password by default first, and those are caseless. This means that anything using an LDAP bind, all of our web-sites that require authentication, will have a caseless password.

Looking at what would break if we turn NDS passwords off, I got a large list. We have some older servers in the tree (NetWare 6.0, and one lone NetWare 5.1 out there), and some console utilities would just plain break. Plus, at least one of us is still using ArcServe of an unknown version and I have zero clue if that would break if we remove NDS passwords (I'm guessing so, but I have no proof). Also, all older clients, such as the DOS boot disks used by our desktop group for imaging and any lingering Win9x we have out there, would break. Not Good.

The list of what'll break if we go to eDir 8.8 is shorter. As that allows the continued setting of the NDS Password, the amount of broken things out there is reduced. We'll have to put a specific dsrepair.nlm on all servers in the tree, but that is easier than working around breaking things. So, we're going to go to eDir 8.8.

This is not without its own problems, as some things DO still break. That lone NetWare 5.1 server will have to go. I've been assured that it is redundant and can go, but it'll need to ACTUALLY go. The NetWare 6.0 servers should be fine, as they're all at a DS rev that'll work with 8.8. Some of the 8.7.3 servers are still at 8.7.3.0 and should get updated for safety's sake. Also, all administrative workstations need to have NICI 2.7.x installed on them in order to understand the new eDir dialect, but that's a minor detail.

We won't be able to take advantage of some of the other nifty things eDir 8.8 introduces, as were still 95% NetWare when it comes to replica holders. Encrypted replication and multiple eDir instances will have to wait.

I HOPE to get eDir 8.8 in before class start, as the downtime required for DIB conversion is not trivial, and the first 4 weeks of class are always pretty hard on the DS servers due to updates.

Friday, September 22, 2006

Making data-junkies happy

Once Upon a Time this was Developer.novell.com only option, but somewhere along the line it snuck into the ConsoleOne directory. Take a look at...

ConsoleOne\1.2\reporting\bin\odbc.exe

Use and enjoy.

Tags: novell, edir

Monday, August 21, 2006

Removing replicas

Doesn't change anything, it's just fun to see all the blnk traffic in dstrace.

Tags: edir

Labels: edir

Friday, August 18, 2006

Fun stuff next Tuesday

- No direction yet from On High (which just changed people) regarding Novell-supplied operating systems.

- Two NetWare dependancies on those two servers

And then we learn how to support these things. With patches coming out for them every other day (thank you, Open Source) the patch-cycle management will need tweaking from what we do with our other systems (specifically, NetWare and Windows). Also needing input is how to get the other admins in, and how hosting eDir on Linux changes how we kick problems.

Exciting stuff. There are a few things that needed doing to get it into our environment. Firstly, Hera is a Primary Timesync source, which will obviously have to change. Second to that, we have a pair of service monitoring services that need to be informed how to re-query their bits. This server doesn't do SLP, so we don't have to worry about that problem quite yet.

I hope to post experiences after I have things in place. But the server is physically hosted not in my building, so I'll be away-from-desk the whole day I'm doing the install.

Tags: netware, edir