Wednesday, December 09, 2009

How DTV and HD Radio mirror security

One of the complaints leveled against the now-completed transition of US television broadcasts to pure digital is that the range is reduced in a lot of cases. The same thing has been said about HD Radio, where the signal goes from crystal clear to nothing. Both are in the Seattle market, but up here in Bellingham we only have DTV; I don't know of any HD Radio stations up here.

Which is sad, since I can occasionally pick up some of the Seattle stations if I'm north of town a ways. The Chuckanut mountains (yes, that's their name!) get in the way of line-of-sight while in town. However, there is no HD radio to be had. In large part this is because the Canadians don't have an HD radio standard approved and that's where most of our radio comes from.

Which is a long way from security, but the reasons for this are similar to something near and dear to any security maven's heart: two-factor security.

With analog TV and radio signals, the human brain was very good at picking the content out of the noise. Noise is part and parcel of any analog RF system, even if you can't directly perceive it. Even listening to a very distant AM station, I can generally make out the content if I speak that language, or I already know the song. Those two things allow a much better hit-rate for predicting what sound will come next, which in turn enhances understanding. My assumptions about the communication method create a medium in which a large amount, perhaps a majority, of the consumed bandwidth is used as what are essentially check-sums.

Consider listening to a news-reader read text off a page. Call it 80 words per minute, and if you assume 5 characters per word, that comes to 400 characters a minute. Add another 80-120 characters for various punctuation and white-space, assume 7-bit ASCII since special characters are generally hard to pronounce, and you have a bit-rate of between 56 and 61 bits per second, on a channel theoretically capable of orders of magnitude more than that. Those extra bits are insulation against noise. This is how you can understand said news-reader when your radio station is drowning in static.
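For anyone who wants to check the back-of-envelope numbers, here is a small sketch of the arithmetic. The constants are just the assumptions stated above (80 words per minute, 5 characters per word, 7-bit ASCII); the function name is made up for illustration.

```python
# Rough bit-rate of spoken text, using the assumptions from the paragraph above.
WORDS_PER_MINUTE = 80
CHARS_PER_WORD = 5
BITS_PER_CHAR = 7  # 7-bit ASCII


def speech_bit_rate(extra_chars_per_minute: int) -> float:
    """Bits per second for a news-reader, given extra punctuation/white-space."""
    chars_per_minute = WORDS_PER_MINUTE * CHARS_PER_WORD + extra_chars_per_minute
    return chars_per_minute * BITS_PER_CHAR / 60


low = speech_bit_rate(80)    # 480 characters a minute
high = speech_bit_rate(120)  # 520 characters a minute
print(f"{low:.1f} to {high:.1f} bits per second")
```

Either way the answer is a few dozen bits per second, which is tiny next to what the channel can carry.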

TV is much the same. Back in the rabbit-ear era of my youth, we used to watch UHF stations through a fog of snow. It was just fine, we caught the meaning. It worked even better if the show was one we'd seen before, which helped fill in gaps.

Then along came the digital versions of these formats. One thing was pretty clear: a marginal signal meant a greatly reduced chance of getting anything at all. Instead of a slow fall-off of content, you had a sharp cliff where noise overcame the error correction in the signal processor hardware. However... so long as you were within the error correction thresholds, your listening/watching experience was crystal clear.

The 'something you are' part of the security triumvirate of have/are/know is a lot like the experience with the analog-to-digital conversion of TV and radio. The something you actually are is an analog thing, be it a fingerprint, the irides in your eyes, the shape of your face, a DNA sequence, or your voice. The biometric device encodes this into a digital format that is presumably unique per individual. As we have plenty of experience with, the analog-to-digital conversion is a fundamentally noisy one, so this encoding has to include a 'within acceptable equivalency thresholds' factor.

It is this noise factor that is the basis of a whole category of attacks on these sensors. It is not sufficient to ensure that the data is a precise match, for some of these, such as voice or face, can change on a day-to-day basis, and others, such as finger or iris prints, can be faked very convincingly. The latter is why the higher-priced fingerprint sensors also do skin conductivity tests to ensure it is skin and not a gelatin imprint, among other 'live person' tests.

This makes the 'something you are' part of the triumvirate potentially the weakest. 'Something you know,' your password, is a very few bytes of information that has to be 100% correctly entered every time. 'Something you have' can be anything from a SecurID key-fob to a smart-chipped card, which also requires 100% correctness. There is a fuzz factor for things like SecurID that use time as part of the process, so this is not quite 100%. However, 'something you are' is potentially quite a lot of data at a much lower precision than the other two.
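To make the time fuzz factor concrete, here is a minimal sketch. SecurID's own algorithm is proprietary, so this uses the openly documented HOTP/TOTP style of code generation as a stand-in; the function names, the 30-second step, and the one-step drift allowance are all assumptions for illustration.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP-style code (RFC 4226 construction), used here as a stand-in."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_time_code(secret: bytes, submitted: str, now: float,
                     step: int = 30, drift_steps: int = 1) -> bool:
    """Accept the code for the current time step, plus or minus drift_steps.

    The code itself must match exactly; the only fuzz is in which
    time steps the verifier is willing to consider.
    """
    current = int(now // step)
    for delta in range(-drift_steps, drift_steps + 1):
        counter = current + delta
        if counter < 0:
            continue  # no time steps before the epoch
        if hmac.compare_digest(hotp(secret, counter).encode(), submitted.encode()):
            return True
    return False
```

Note that the fuzz only widens the set of acceptable time steps. Each candidate code is still compared bit for bit, which is a very different thing from a biometric 'close enough' match.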

There is a LOT of effort going into developing algorithms that can perform the same distillation of content our brains do when listening to a news-reader on a distant AM station. You don't check the whole data returned by the finger reader, you just check (and store) the key identifiers inherent in all fingerprints, identifiers that are distilled from the whole data. The identifiers will get better over time as we gain better understanding of what this kind of data looks like. No matter how good we get with that, they'll still have uncertainty values assigned to them due to the analog/digital conversion.
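The difference between the two kinds of check can be sketched in a few lines. This is a toy, not how any real fingerprint matcher works: the feature vectors, the Euclidean distance, and the threshold value are all assumptions picked purely for illustration.

```python
import math


def password_match(stored: str, presented: str) -> bool:
    """'Something you know': every character has to be exactly right."""
    return stored == presented


def biometric_match(enrolled: list[float], sample: list[float],
                    threshold: float) -> bool:
    """'Something you are': close enough counts, because the sensor is noisy.

    enrolled and sample are hypothetical distilled feature vectors,
    not the raw scan.
    """
    if len(enrolled) != len(sample):
        raise ValueError("feature vectors must have the same length")
    return math.dist(enrolled, sample) <= threshold


enrolled = [0.20, 0.80, 0.50]
same_finger_noisy = [0.21, 0.79, 0.52]
different_finger = [0.90, 0.10, 0.40]

print(biometric_match(enrolled, same_finger_noisy, threshold=0.05))
print(biometric_match(enrolled, different_finger, threshold=0.05))
```

Wherever you set the threshold, you are trading false rejects against false accepts, and that trade is the room the attacks play in.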

Monday, December 07, 2009

Account lockout policies

This is another area where Novell and Microsoft handle a feature very differently.

Since NDS was first released back at the dawn of the commercial internet (a.k.a. 1993) Novell's account lockout policies (known as Intruder Lockout) were set-able based on where the user's account existed in the tree. This was done per Organizational-Unit or Organization. In this way, users in .finance.users.tree could have a different policy than .facilities.users.tree. This was the case in 1993, and it is still the case in 2009.

Microsoft only got a hierarchical tree with Active Directory in 2000, and they didn't get around to making account lockout policies granular. For the most part, there is a single lockout policy for the entire domain with no exceptions. 'Administrator' is subjected to the same lockout as 'Joe User'. With Server 2008 Microsoft finally got some kind of granular policy capability in the form of "Fine Grained Password and Lockout Policies."

This is where our problem starts. You see, with the Novell system we'd set our account lockout policies to lock after 6 bad passwords in 30 minutes for most users. We kept our utility accounts in a spot where they weren't allowed to lock, but gave them really complex passwords to compensate (as they were all used programmatically in some form, this was easy to do). That way the account used by our single-signon process couldn't get locked out and crash the SSO system. This worked well for us.
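As a rough illustration of the policy described above, here is a minimal sketch of a sliding-window lockout counter with an exemption list. It is not how eDirectory or AD implement lockout; the class, its parameters, and the account names are hypothetical.

```python
from collections import defaultdict, deque


class LockoutPolicy:
    """Lock an account after max_failures bad passwords within window_seconds."""

    def __init__(self, max_failures: int = 6, window_seconds: int = 30 * 60,
                 exempt: tuple[str, ...] = ()):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self.exempt = set(exempt)            # utility accounts that never lock
        self.failures = defaultdict(deque)   # account -> timestamps of bad logins

    def record_failure(self, account: str, now: float) -> bool:
        """Record a bad password at time `now`; return True if the account locks."""
        if account in self.exempt:
            return False
        recent = self.failures[account]
        recent.append(now)
        # Forget failures that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        return len(recent) >= self.max_failures


policy = LockoutPolicy(exempt=("sso-service",))
locked = [policy.record_failure("joe.user", t) for t in range(0, 360, 60)]
print(locked[-1])  # the sixth bad password inside 30 minutes
```

The exemption list is the piece the per-container Novell policy gave us for free, and the piece a single domain-wide policy can't express.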

Then the decision was made to move to a true blue solution and we started to migrate policies to the AD side where possible. We set the lockout policy for everyone. And we started getting certain key utility accounts locked out on a regular basis. We then revised the GPOs driving the lockout policy, removing them from the Default Domain Policy and creating a new "ILO policy" that we applied individually to each user container. This solved the lockout problem!

Since all three of us went to class for this 7-9 years ago, we'd forgotten that AD lockout policies are monolithic and only work when specified in the Default Domain Policy. They do NOT work per-user the way they do in eDirectory. By doing it the way we did, no lockout policies were being applied anywhere. Googling on this gave me the page for the new Server 2008-era granular policies. Unfortunately for us, it requires the domain to be brought to the 2008 Functional Level, which we can't do quite yet.

What's interesting is a certain Microsoft document that suggested settings of 50 bad logins every 30 minutes as a way to avoid DoSing your needed accounts. That's way more than 6 every 30.

Getting the forest functional level raised just got more priority.

Labels: edir, microsoft, netware, novell, passwords, security, sysadmin

Thursday, November 12, 2009

Passwords

Over the years I've heard variations on this complaint:

> "I don't need a secure password since everything I work on can be seen with a freedom-of-information-act filing anyway."

In the run-up to the internal lobbying effort that allowed us to start password aging and put password complexity rules into place, we ran L0phtcrack against our Windows domain passwords. The results were astounding. A crushingly large percentage of passwords were still set to ones well known to be used by the helpdesk during password resets; users had never gone back and changed their password after having it reset by said helpdesk. A not very surprising but still disheartening number was the percentage of passwords set to either "password" or "p@$$w04D". These results are what convinced upper management to push password complexity policies onto the unwilling masses.

But that doesn't address the complaint above, merely shows the effects of this attitude. While it may be true that you work on nothing confidential, you still have one thing near and dear to your heart that you do care about: identity. Especially with the advent of web-based enterprise email, this is a very important thing. While it is trivial to impersonate an email address, it carries far more weight when that email is delivered from our servers. What's more, the ability to reply to legitimate email as you is something you don't want anyone else to have. And finally, I don't know a single person that fails to have at least some personal correspondence in their work mailboxes, even if it only exists in the trash folder. That information may still be retrievable by an FOIA filing, but the ability to generate information is not, and generating information in your name is what you allow by having your password compromised.

We mean that. We don't allow managers to have departed employees' passwords for the same reason. Happily these sorts of gripes are becoming ever less common as the lessons of phishing come home to more and more people. But this gripe is one that is particular to the public sector, so many of you may not have heard it before.

Tuesday, June 30, 2009

Super users

Having been a 'super user' for most of my career, I do not have the same perspective other people do when it comes to interacting with corporate IT. Because of what I do, I see everything. That's part of my job, so that's what I see. I have to know it is there.

However, how each company handles their elevated privilege accounts varies. Some of it depends on what system you're working in, of course.

Take a Windows environment. I see three big ways to handle the elevated user problem:

1. One Administrator account, used by all admins. Each admin has a normal user account, and logs in as Administrator for their adminly work.
   - Advantages: Only one elevated account to keep track of.
   - Disadvantages: Complete lack of auditing if there is more than one admin around. Also, unless said admin has two machines, or has a VM for adminly work, they're logged in as Administrator more often than they're logged in as themselves.

2. One Administrator account, admins' user accounts are elevated to Administrator. Each admin's normal account is elevated. Administrator is relegated to a glorified utility account, useful for backups, other automation, or if you need to leave a server logged in for some reason.
   - Advantages: Audit trail. Changes are done in the name of the actual admin who performed the change.
   - Disadvantages: These users really need to be exempted from any Identity Management system. Since there are only going to be a few of them, this may not matter. Also, these users need to treat these passwords like the Administrator password.

3. Each admin gets two accounts, normal and elevated. As with the above, Administrator is a glorified utility account. But each admin gets two accounts: a normal account for everyday use (me.normal) and an elevated account (me.super) for functions that need that kind of access.
   - Advantages: Provides an audit trail, and allows the admin's normal account to be subject to identity management safely. Easy availability of a 'normal' account allows faster troubleshooting of permissions issues (hard to check when you can see everything).
   - Disadvantages: Admin users are juggling two accounts again, with the same problems as option 1.

What other methods have you seen in use?

Friday, May 08, 2009

Password stealing

There has been some press lately about the University of California, Santa Barbara having assumed control of a Torpig botnet. They've put out a report, and it has been getting some attention. There is some good stuff in there, but I wanted to highlight one specific thing.

The Ars Technica review of it says it pretty directly:

> The researchers noted, too, that nearly 40 percent of the credentials stolen by Torpig were from browser password managers, and not actual login sessions

The 'browser password managers' are the password managers built in to your browser-of-choice for the ease of logging in to sites. I have personally never, ever used them because the idea of saving my passwords like that gives me the creeps. Even if it is AES encrypted. However, the way to attack those repositories is not through grabbing the file, it is through the browser itself. File-level security is only part of the game, even if it is the easiest to secure.

This extends to other areas as well. I exceedingly rarely click the, "remember this password," button in anything I'm on. This includes things like the Gnome keyring. That kind of thing is not a good idea in general.

The closest I get is a text-file on one of these (now with Linux support!), and even that is a compromise between having to memorize a lot of cryptographically secure passwords (long AND complex) and the least wince-worthy memory-jogging method. I can still describe several attack methods that could compromise that file, not the least of which is a clipboard/keylogger, or even a simple file-sniffer running in the background that drives through any mounted USB sticks. But... for long work passwords I'll use maybe four or five times a year, but still have to know, it's a compromise.

There are still some passwords I'll never write down outside of a password field, such as the god passwords, any password I use on a daily or even weekly basis (I use those often enough for true memorization), or passwords used for any kind of financial transaction. For those kinds of high-value passwords, convenience of memory prosthetics doesn't enter into it.

Tuesday, January 22, 2008

Distributed Identity (such as OpenID) and security

Distributed identity systems are hot these days. OpenID has been around for a while, and Yahoo! just jumped on that bandwagon, possibly to stick it to Microsoft, who is deploying LiveID. Blogger just started allowing non-Google logins for things like comments.

These systems work by splitting apart authentication (verify who you are) and authorization (what you're allowed to do). Single-Sign-On systems work this way as well, but these systems take that to a much greater scale. Once you've been authenticated by the trusted third party, you are authorized to access the specified resources. In the web domain this is easily handled through cookies.

I noticed this text on the LiveID page I linked to:

> Microsoft's Windows XP has an option to link a Windows user account with a Windows Live ID (appearing with its former names), logging users into Windows Live ID whenever they log into Windows.

I did not know that. Shows what I pay attention to. What this tells me is that it is possible to synchronize your local WinXP login with a LiveID. This causes me to glower, because I inherently trust my local system differently than I do miscellaneous web services. Yes, the authenticator is the piece I need to worry about as it is how I get to prove I'm me, and that's just in one spot. But still, one compromised account (my LiveID account) and everything is shot.

Let's take it a bit further. It would probably be easy to get LiveID working inside of SharePoint, especially since a developer SDK has been released to do just that. This would permit LiveIDs access into SharePoint. Handy for collaborating with colleagues working for other companies or universities.

Now what if Microsoft managed to kerberize LiveID? That would make it possible to use LiveID to log in against any Kerberos-enabled service, as well as almost anything Active Directory-enabled. It'd probably take a tree-level (or maybe domain-level) trust established to the foreign tree (LiveID in this case) to make it work, but it could be done. Use LiveID to log into Exchange with Outlook, or map a share. Use your corporate login to work on your partner's ordering system.

This scares me. In principle, not just because it's Microsoft I'm talking about here. Yes, it can be a great productivity enhancer, but the devil lurks in the failure modes. Identity theft is big business now, and anything that extends the reach of a single ID makes the ID that much more valuable. Social Security Numbers to us Americans are big deals since we can't renumber those, thus we have to protect them as hard as we can. Until we get a better handle on identity theft, these sorts of "One ID to rule them all," systems just make me wince.

Thursday, December 20, 2007

eDir 8.8, Priority Sync

One of the things that grabbed my attention with 8.8 is 'priority sync'. The documentation has an overview of it:

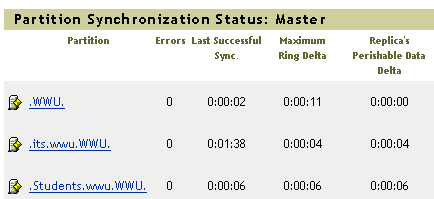

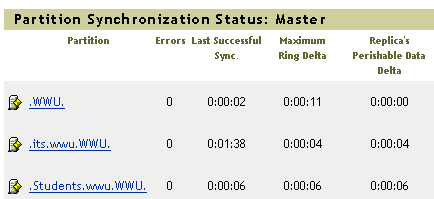

Looking at iMonitor I see this:

As 90-95% of our user objects are in either the root container or the students container, those are the statistics I'm interested in. The "maximum ring delta" number is very, very rarely over 30 seconds for these two partitions. With it being intersession right now, we're seeing some higher numbers than usual right now but it is still kept in close sync. As we have 24 hour computer labs, and a simple login causes several user-object attributes to update, we have a continual flow of directory changes. In our case, using Priority Sync would buy us a few seconds at most. We're not under any sort of regulatory mandate to do things 'instantly' so that isn't an issue, and our password-change process is well known to our end users for taking "up to 5 minutes".

Still, I like the idea even if it isn't a good fit for us.

6.0 Priority SyncWhich sounds spiffy. Instant sync of passwords? I'm all for that. Then I remembered, 'wasn't that happening already? That's right, that's the "SYNC_IMMEDIATE" flag in schema.' And that's what's described in this older CoolSolutions article.

Priority Sync is a new feature in Novell® eDirectory 8.8™ that is complimentary to the current synchronization process in eDirectory. Through Priority Sync, you can synchronize the modified critical data, such as passwords, immediately.

You can sync your critical data through Priority Sync when you cannot wait for normal synchronization. The Priority Sync process is faster than the normal synchronization process. Priority Sync is supported only between two or more eDirectory 8.8 or later servers hosting the same partition.

6.1 Need for Priority Sync

Normal synchronization can take some time, during which the modified data would not be available on other servers. For example, suppose that in your setup you have different applications talking to the directory. You change your password on Server1. With normal synchronization, it is some time before this change is synchronized with Server2. Therefore, a user would still be able to authenticate to the directory through an application talking to Server2, using the old password.

In large deployments, when the critical data of an object is modified, changes need to be synchronized immediately. The Priority Sync process resolves this issue.

Looking at iMonitor I see this:

As 90-95% of our user objects are in either the root container or the students container, those are the statistics I'm interested in. The "maximum ring delta" number is very, very rarely over 30 seconds for these two partitions. With it being intersession right now, we're seeing some higher numbers than usual, but it is still kept in close sync. As we have 24-hour computer labs, and a simple login causes several user-object attributes to update, we have a continual flow of directory changes. In our case, using Priority Sync would buy us a few seconds at most. We're not under any sort of regulatory mandate to do things 'instantly' so that isn't an issue, and our password-change process is well known to our end users for taking "up to 5 minutes".

Still, I like the idea even if it isn't a good fit for us.

Thursday, August 23, 2007

Politics of passwords

It has been a common theme here for some years now to increase our password security. Two years ago (or was it three?) we rolled out Universal Passwords in an effort to gain more flexibility in the passwords we support. We've had password sync between Novell, AD, and Solaris for y-e-a-r-s, so our users have grown used to single sign-on. I've talked a couple of times about password complexity and how it works in a multiple system environment, twice in October (the 16th, and 17th). I've even talked about why Novell had to resort to Universal Passwords, because the NDS Password was too secure.

When we got our new Vice Provost, we got a person who wasn't familiar with the history of our organization. These sorts of things are always a mixed blessing. In this case, he wanted to get password aging going. The previous incumbent had considered it, but the project was on perma-hold while he worked certain political issues. The new guy managed to make a convincing argument to the University leadership, and the fiat to do password aging came down from the very top. And So It Shall Be. And Is. As with our existing password sync systems, this is a system we built from internal components and uses no Novell IDM stuff at all. It works for us.

Yesterday we got asked to make certain that the Novell password was case-sensitive.

I thought it already was, as Universal Passwords are case sensitive. But testing showed that you could set a mixed-case password on an account, and log in to Novell with the lower-case password. It won't allow workstation login on domained PCs, as the AD password is mixed-case. Students who only ever log in using web-services sometimes got a shock when using a lab for the first time and the password they'd been using for months didn't work.

There are two things working against us here.

- We did NOT set the "NMAS Authentication = On" setting in the Client we push. This means that while we are setting a universal password, none of our Novell Clients have been told to use them.

- LDAP logins to eDir 8.7.3 try the NDS password first by default, and those are caseless. This means that anything using an LDAP bind, which includes all of our web-sites that require authentication, will have a caseless password.

Looking at what would break if we turn NDS passwords off, I got a large list. We have some older servers in the tree (NetWare 6.0, and one lone NetWare 5.1 out there), and some console utilities would just plain break. Plus, at least one of us is still using ArcServe of an unknown version and I have zero clue if that would break if we remove NDS passwords (I'm guessing so, but I have no proof). Also, all older clients, such as the DOS boot disks used by our desktop group for imaging and any lingering Win9x we have out there, would break. Not Good.

The list of what'll break if we go to eDir 8.8 is shorter. As that allows the continued setting of the NDS Password, the amount of broken things out there is reduced. We'll have to put a specific dsrepair.nlm on all servers in the tree, but that is easier than working around breaking things. So, we're going to go to eDir 8.8.

This is not without its own problems, as some things DO still break. That lone NetWare 5.1 server will have to go. I've been assured that it is redundant and can go, but it'll need to ACTUALLY go. The NetWare 6.0 servers should be fine, as they're all at a DS rev that'll work with 8.8. Some of the 8.7.3 servers are still at 8.7.3.0 and should get updated for safety's sake. Also, all administrative workstations need to have NICI 2.7.x installed on them in order to understand the new eDir dialect, but that's a minor detail.

We won't be able to take advantage of some of the other nifty things eDir 8.8 introduces, as we're still 95% NetWare when it comes to replica holders. Encrypted replication and multiple eDir instances will have to wait.

I HOPE to get eDir 8.8 in before class start, as the downtime required for DIB conversion is not trivial, and the first 4 weeks of class are always pretty hard on the DS servers due to updates.

Tuesday, May 15, 2007

The sky has not fallen

Today is the day we're flipping the switch and expiring passwords that haven't been changed in X days. There have been a metric ton of emails about this, and we've notified everyone we know who hasn't changed their password (and we can tell who they are) many, many times. Of the 21,000 or so accounts, I think I heard that 3,000 hadn't changed passwords yet. Those 3K people will have their passwords expired randomly over the next two weeks.

This morning we haven't had a call from either Helpdesk! Either these people don't log in as often as we thought (the more likely explanation), or they haven't had any issues with the password change screen.

Here is hoping it keeps up!

Monday, October 16, 2006

Strong passwords in a multiple authentication environment

One of the challenges of coming up with a reasonable password complexity policy is taking into account the relative strengths and weaknesses of the operating environments those passwords will be used in. Different operating systems have different strengths and weaknesses when it comes to password strength. Different environments have different threat exposures.

The two biggest things to worry about for brute-force password problems are random guessing, and hash-grab-and-crack. I'm ignoring theft or social engineering for the moment, as plain old password complexity doesn't do a lot to address those issues. Random guessing is the reason intruder lockout was created. Hash-grab-and-crack is what pwdump1/2/3/4 was created to do, with offline processing.

Password guessing will work on any system, given sufficient time. Not all systems even permit grabbing the password hashes (NDS passwords, for example), whereas on others the location of the hashes is rather well known (/etc/shadow). Grabbing the password hashes is preferred, since it permits offline guessing of passwords that won't trip any intruder-lockout alarms.

As for OS-specific password issues, we have three different systems here at WWU. Our main student shell server is running Solaris 8, so passwords longer than 8 characters are meaningless; only the first 8 characters count. Our eDirectory tree is running Universal Passwords, so passwords of any length are usable. Our Windows environment is not restricted to NTLM2, which means we have NTLM password hashes stored; and in this era of Rainbow Tables any password shorter than 16 characters (of ANY character, regardless of char-set) is laughably easy to crack if you have the hash.

This leads us to strange cases. This password:

1ÃÃb$R=0

is very, very secure in Solaris, but laughably easy in Windows. And this password:

0123456789abcefBubba2pAantz

is a very good Windows password, but laughably easy on Solaris.

So, what are we to do? That's a good question. Solaris passwords prefer complexity over length, and Windows passwords prefer length over complexity. This would imply that the optimal password policy is one that mandates long (longer than 16 characters) complex (the usual rules) passwords. Solaris will only take the first 8 characters, so the complexity requirement needs to be beefy enough that the first 8 characters are cryptographically strong.
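To make that combined policy concrete, here is a minimal sketch of a checker for it. It is only an illustration: the function names, the four character classes, and the "at least three classes in the first 8 characters" threshold are my assumptions standing in for "the usual rules", not anything we actually run.

```python
# Sketch only: names and thresholds below are assumptions, not a real policy engine.
SOLARIS_SIGNIFICANT_CHARS = 8   # Solaris 8 only looks at the first 8 characters
WINDOWS_MIN_LENGTH = 17         # "longer than 16 characters"


def character_classes(text):
    """Count how many of four classes (lower, upper, digit, other) appear."""
    checks = (
        any(c.islower() for c in text),
        any(c.isupper() for c in text),
        any(c.isdigit() for c in text),
        any(not c.isalnum() for c in text),
    )
    return sum(checks)


def check_password(password, min_classes=3):
    """Report whether a password satisfies both halves of the policy."""
    # Only this prefix matters to Solaris, so complexity is judged on it alone.
    prefix = password[:SOLARIS_SIGNIFICANT_CHARS]
    return {
        "long_enough_for_windows": len(password) >= WINDOWS_MIN_LENGTH,
        "prefix_complex_enough_for_solaris": character_classes(prefix) >= min_classes,
    }


if __name__ == "__main__":
    print(check_password("0123456789abcefBubba2pAantz"))
```

The long Windows-friendly password above passes the length test but fails the prefix test, because its first 8 characters are all digits.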

One of the first things a hacker does once they gain SYSTEM access on a Windows box is dump the SAM list on that server. I've seen this done every time I've had to investigate a hacked server. When the machine being hacked is a domained machine, the threat to the domain as a whole increases. So far I haven't seen a hacker successfully dump the entire AD domain. On the other hand, in one memorable case the SAM was dumped at 12:06am, and by 12:17am a text file containing the cleartext passwords had been written, including that of the local-administrator account (a password 10 characters long, three character sets, no dictionary words; in other words, a good Solaris password). Clearly a Rainbow Table had been used to crack it that fast. This was almost two years ago.

One problem with long, complex passwords that are complex enough in the first 8 characters is human memory. 8-10 characters is about as long as anyone can remember a gibberish password like "{BJ]+5Bf", and it'll take that person a while to learn it. Going the irregular-case and number-substitution route can add complexity, but cryptographically speaking not a lot. Password crackers like John the Ripper contain algorithms to replace "a" with "4" and "A", to make sure your super secret password "P45$w0r|)" is cracked within 1 minute. Yet something like "FuRgl1uv2" works out, as it contains bad spelling.
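A rough sketch of why substitutions buy so little: undo the common swaps and look the result up in a word list. This is not how John the Ripper is implemented (as far as I know, real crackers mangle dictionary words forward with much larger rule sets); the substitution table and the three-word dictionary here are made up purely for illustration.

```python
# Hypothetical substitution table and word list, for illustration only.
LEET_MAP = {
    "4": "a", "@": "a", "5": "s", "$": "s",
    "0": "o", "1": "l", "3": "e", "|)": "d",
}
TINY_DICTIONARY = {"password", "letmein", "dragon"}


def undo_substitutions(candidate):
    """Lower-case the candidate and reverse the substitutions in LEET_MAP."""
    text = candidate.lower()
    # Handle longer tokens first so multi-character ones like "|)" are replaced whole.
    for token in sorted(LEET_MAP, key=len, reverse=True):
        text = text.replace(token, LEET_MAP[token])
    return text


def is_disguised_dictionary_word(candidate):
    """True when the de-substituted candidate is a plain dictionary word."""
    return undo_substitutions(candidate) in TINY_DICTIONARY


if __name__ == "__main__":
    for pw in ("P45$w0r|)", "FuRgl1uv2"):
        print(pw, is_disguised_dictionary_word(pw))
```

"P45$w0r|)" collapses straight back to "password", while "FuRgl1uv2" collapses to something that is in no dictionary, which is the whole point of creative misspelling.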

Never underestimate the cryptographic potential of bad spelling. Especially creative bad spelling.

We still haven't solved this one. We're working on upgrading Solaris to a version that'll take longer passwords, and on the resultant migration that'll be required. We know where we need to go, but getting the culture at WWU shifted so that such requirements won't end up with a user revolt and passwords on post-its is the problem. Two-factor is not viable for a number of reasons (cost, and web-access being the two top ones). Mandatory password rotation is something we only do in the 'high security' zone (banner), not something we do for our regular ole systems. It's a bad habit we're trying to break, but institutional inertia is in the way and that takes time to overcome.

If Microsoft decided to salt their NTLM hashes, and therefore render Rainbow Tables mostly useless, we wouldn't be in this mess. They've seen the light (NTLM2, and whatever Vista-server will bring out), but that won't help all the legacy settings out there. NTLM is already legacy, yet we have to keep it around for a number of reasons, right up there being that Samba doesn't speak NTLM2.
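For anyone wondering why a salt matters so much, here is a small sketch. It uses SHA-256 from Python's hashlib as a stand-in for the real Windows hash function, and the function names are mine; the only point is that an unsalted hash of a given password is the same everywhere, so it can be precomputed once, while a salted one cannot.

```python
import hashlib
import os


def unsalted_hash(password):
    """Same password always gives the same digest, so a table can be built in advance."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


def salted_hash(password, salt=None):
    """Mix a per-account salt into the digest; returns (salt_hex, digest_hex)."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt for each account
    digest = hashlib.sha256(salt + password.encode("utf-8")).hexdigest()
    return salt.hex(), digest


if __name__ == "__main__":
    # Two accounts with the same password: identical unsalted, different salted.
    print(unsalted_hash("Bubba2pAantz") == unsalted_hash("Bubba2pAantz"))
    print(salted_hash("Bubba2pAantz")[1] == salted_hash("Bubba2pAantz")[1])
```

With a salt, an attacker would need a separate table for every salt value, which defeats the precomputation that makes Rainbow Tables fast.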

Who knows, it may end up that what solves this for us is getting Solaris to take long passwords, rather than educating all of our users on what a good password looks like.

Tags: sysadmin, passwords