It seems that anything that runs over TCP can be tunneled over HTTPS. This is not a good thing when it comes to network security. As an example of the kind of whack-a-mole this can result in, I give you the tale of SSH. With a twist.

Power User: Surfs web freely. It is good.

Corporate Overlords: "In the interests of corporate productivity, we will be blocking certain sites." Starts blocking Facebook, MySpace, Twitter, and all sorts of other popular time-wasters like espn.com.

Power User: Is thwarted. Hunts up an open proxy server on the net. Surfs Facebook in the clear again. It is good.

Corporate Overlords: Informs network security office, creating it from scratch if need be, that it has come to their attention that the blocks are being circumvented, and that This Needs To Stop. Make it no longer so.

Network Security: "Yessir, will do sir. Will need funds for this."

Corporate Overlords: Supplies funds.

Network Security: Adds known-proxies to the firewall block list.

Power User: Is thwarted. Googles harder, finds an open proxy not on the list. Unrestricted internet access restored. It is good.

Network Security: Subscribes to a service that supplies open-proxy block lists.

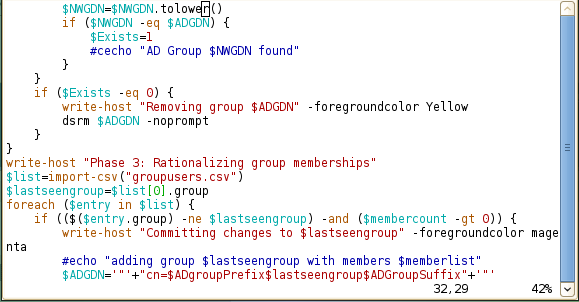

Power User: Is thwarted. Googles harder. Can't find accessible open proxy anywhere. Decides to make their own. Downloads and installs Squid on their home Linux server. Connects to home server over SSH, tunnels to Squid through the SSH session. Unrestricted internet access restored. It is good.

Network Security: Notices uptick in TCP/22 traffic. Helpdesk tech gets busted for surfing YouTube while on the job. Machine dissection reveals SSH tunnel. Blocks TCP/22 at the router.

Power User: Is thwarted. When home next, moves SSH port to TCP/8080. Gets to work, uses TCP/8080 for SSH session. Unrestricted internet access restored. It is good.

Corporate Overlords: "In the interests of productivity, instant messaging clients not on the corporate approved lists are now banned."

Power User: Is not affected. Continues surfing in the clear. It is good.

Corporate Overlords: "In the interests of productivity, all unapproved non-HTTP off-network internet access is now banned."

Power User: Is thwarted. Moves SSH to TCP/80. Unrestricted internet access restored. It is good.

Network Security: Implements deep packet inspection on the firewall to make sure TCP/80 and TCP/443 traffic really is HTTP.

Power User: Is thwarted. Spends a week, gets SSH-over-HTTP working. Unrestricted internet access restored. It is good.

Network Security: Implements mandatory HTTP proxy, possibly enforcing it via WCCP.

Power User: Is thwarted; the proxy's cache mucks up SSH sessions. Moves to HTTPS. Unrestricted internet access restored. It is good.

Network Security: Subscribes to a firewall block-list that lists broadband DHCP segments. Blocks all unapproved access to these IP blocks.

Power User: Is thwarted. Buys ClearWire WiMax modem. Attaches to work machine via 2nd NIC. Unrestricted internet access restored. It is very good, as access is faster than crappy corporate WAN. Should have done this much earlier.

Network Security: Developer busted for having a Verizon 3G USB modem attached to machine. Buys desktop inventorying software. Starts inventorying all workstations. Catches several others.

Power User: Sees notice about inventorying. Starts bringing home laptop to work to attach to ClearWire modem. Workstation is squeaky clean when inventoried. Uses USB stick to transfer files between both machines. Unrestricted internet access maintained. It is good.

Network Security: Starts random inspections of cubes for unauthorized networking and computing gear. Catches wide array of netbooks and laptops.

Power User: Hides ClearWire modem in cunningly constructed wooden plant-stand. Buys hot-key selectable KVM switch to hide in desk. Hides netbook in back of file-drawer, runs KVM cable to workstation keyboard/mouse/monitor. Runs USB hub to netbook, hides hub in plain sight next to keyboard. Is smug. Unrestricted internet access maintained. It is good.

Now that 3G and WiMax are coming out, it is a lot harder to maintain productivity-related network blocks. The corporate firewall is no longer the sole gateway between users and their productivity-destroying social networking sites. A netbook with an integrated 3G modem will give them their fix. As will most modern smartphones these days.

As for information leakage, that's another story altogether. The defensive surface in an environment that includes ubiquitous wireless networking now includes the corporate computing hardware, not just the border network gear. This is where USB/FireWire attachment policies come into play. A workstation with two NICs can access a second network, so the desktop asset inventorying software needs to alarm when it discovers desktop machines with more than one interface that has an IP address.
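The "more than one IP-bearing interface" alarm can be sketched in a few lines of Python. This is only an illustration, not how any particular inventory product works: the record format (a mapping of interface name to a list of address strings), the function names, and the sample addresses are all made up for the example. Loopback, link-local, and unspecified addresses are ignored so that an ordinary single-NIC desktop doesn't trip the alarm.

```python
import ipaddress


def routable_interfaces(interfaces):
    """Return the names of interfaces holding at least one usable IP address.

    `interfaces` is a hypothetical inventory record: a dict mapping an
    interface name to a list of address strings. Loopback, link-local,
    and unspecified addresses do not count.
    """
    flagged = []
    for name, addresses in interfaces.items():
        for text in addresses:
            ip = ipaddress.ip_address(text)
            if not (ip.is_loopback or ip.is_link_local or ip.is_unspecified):
                flagged.append(name)
                break  # one usable address is enough to count this interface
    return flagged


def should_alarm(interfaces):
    """Alarm when more than one interface carries a usable IP address."""
    return len(routable_interfaces(interfaces)) > 1


# Hypothetical record for a workstation with a second NIC attached.
workstation = {
    "lo": ["127.0.0.1"],
    "eth0": ["10.20.30.40"],
    "eth1": ["192.168.15.2"],
}

if should_alarm(workstation):
    print("ALARM: multiple addressed interfaces:", routable_interfaces(workstation))
```

A check like this only sees what the inventoried machine itself reports, which is exactly why the hidden-netbook end-game sails right past it.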

And yet... the only way to be sure to catch the final end-game I outlined above, an air-gapped external network connection, is through physical searches of workspaces. That's a lot of effort to go to just to prevent information leakage, but if you're in the kind of industry where that's really important, the effort will be invested. In such environments being caught breaking network policy like that can be grounds for termination. And yes, this is a lot of work for the security office.

All in all, it is easier to prevent information leakage than it is to prevent productivity losses due to internet-based goofing off. Behold the power of slack.

What does this have to do with SSH, which is what I titled this post after? You see, SSH is just a tool. It is a very useful tool for dealing with abstract policies of the HTTP-restricting kind, but just a tool. It can get around all sorts of half-assed corporate block attempts. It has been the focus of many security articles over the years, and because of this it is frequently named specifically in corporate security policies.

Focusing policies on banning tools is short-sighted, as evidenced by the 3G/WiMax end-run around the corporate firewall. Since technology moves so fast, policies do need to be somewhat abstract in order to not be rendered invalid due to innovation. A policy banning the use of SSH to bypass the web filters does nothing for the person caught surfing using their own Netbook and their own 3G modem. A policy banning the use of any method of circumventing corporate block policy does. A block list is an implementation of policy, not the policy itself.