Today I reconfigured the MSA1500 to run in Active/Active mode. While there, I also rearranged our disk arrays. We have 41, 500GB, 7.2K RPM drives in there. I created two, 20 disk Arrays, and filled each array with Raid 0+1 LUNs. This yielded 9TB of useful space. That extra drive will stay extra until we get an odd number of new drives.

Yes, a profligate waste of space but at least it'll be fast. It also had the added advantage of not needing to stripe in like Raid5 or Raid6 would have. This alone saved us close to two weeks flow time to get it back into service.

Another benefit to not using a parity RAID is that the MSA is no longer controller-CPU bound for I/O speeds. Right now I have a pair of writes, each effectively going to a separate controller, and the combined I/O is on the order of 100Mbs while controller CPU loads are under 80%. Also, more importantly, Average Command Latency is still in the 20-30ms range.

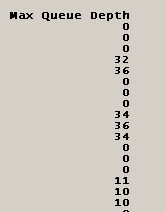

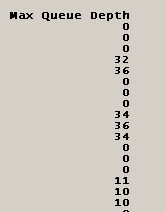

The limiting factor here appears to be how fast the controllers can commit I/O to the physical drives, rather than how fast the controllers can do parity-calcs. CPU not being saturated suggests this, but a "show perf physical" on the CLI shows the queue depth on individual drives:

The drives with a zero are associated with LUNs being served by the other controller, and thus not listed here. But a high queue depth is a good sign of I/O saturation on the actual drives themselves. This is encouraging to me, since it means we're finally, finally, after two years, getting the performance we need out of this device. We had to go to an active/active config with a non-parity RAID to do it, but we got it.

Yes, a profligate waste of space but at least it'll be fast. It also had the added advantage of not needing to stripe in like Raid5 or Raid6 would have. This alone saved us close to two weeks flow time to get it back into service.

Another benefit to not using a parity RAID is that the MSA is no longer controller-CPU bound for I/O speeds. Right now I have a pair of writes, each effectively going to a separate controller, and the combined I/O is on the order of 100Mbs while controller CPU loads are under 80%. Also, more importantly, Average Command Latency is still in the 20-30ms range.

The limiting factor here appears to be how fast the controllers can commit I/O to the physical drives, rather than how fast the controllers can do parity-calcs. CPU not being saturated suggests this, but a "show perf physical" on the CLI shows the queue depth on individual drives:

The drives with a zero are associated with LUNs being served by the other controller, and thus not listed here. But a high queue depth is a good sign of I/O saturation on the actual drives themselves. This is encouraging to me, since it means we're finally, finally, after two years, getting the performance we need out of this device. We had to go to an active/active config with a non-parity RAID to do it, but we got it.