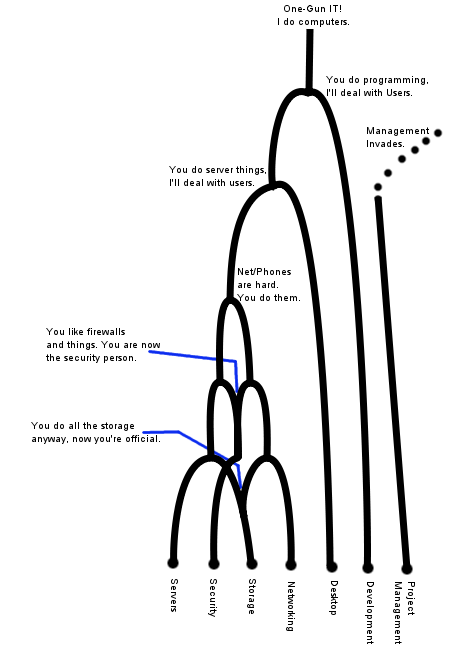

They're coming from an InfoSec point of view, but this fits into the overall IT framework rather well. I even remembered it in the chart I posted back in January:

A discrete Security department is one of the last things to break out of the pack, and that's something the SANS diary entry addresses. Between one-gun IT and a discrete security department (a "police department" in their terms) you get the volunteer fire-department. There may be one person who is the CSO, but there are no full-timers who do nothing but InfoSec stuff. When stuff happens, people from everywhere get drawn in. Sometimes it's the same people every time, and when a formal Security department is formed it'll probably be those people who are in it.

But, stand-by time:

This is time I've been calling "fire-watch" all along. Time when I have to be there in case something goes wrong, but I don't have anything else really going on. I spent a lot of 2010 in "fire-watch" thanks to the ongoing budget crisis at WWU and the impact it had on our project pace.

Although it may sound like it means "stand around doing nothing," standby time is more like on-call or ready-to-serve time. Some organizations implement on-call time as that week or two that you're stuck with the pager so if anything happens after-hours you're the one that gets called. Otherwise known as the "sorry family, I can't do anything with you this week" time. As the organization grows, that will become less onerous as they move to a fully-staffed 24/7 structure with experienced people. That's not really what I mean by standby time.

Standby time is time that is set aside in the daily schedule that is devoted to incident-response. Most of the time it should focus on the first stage of incident-response, or Preparation. It's time spent keeping up to date on security news and events, updating documentation, and building tools and response processes. It's an interruptible time should an incident arise, but it's not interruptible for other meetings or projects.

Kevin Liston is advocating putting actual time on the schedule when you are doing the crisis watch as your primary duty. During this time anything else you do is the kind of thing that is immediately interruptible, such as studying or doing the otherwise low-priority grunt work of InfoSec. Or, you know, blogging.

Does this apply to the Systems Administration space?

I believe it does, and it follows a similar progression to the InfoSec field.

When a crisis emerges in a one-gun shop, well, you already know who is going to handle it.

In the mid-size shops like we had at WWU, the person who handles the crisis is generally the one who discovers it first, a decidedly ad-hoc process.

In the largest of shops where they have a formal Network Operations Center, they may have sysadmin staff on permanent standby just in case something goes wrong. Once Upon a Time, they were called 'Operators'; I don't know what they're called these days. They're there for first-line triage of anything that can go wrong, and they know who to call when something fails outside their knowledge-base.

Standby Time is useful, since it gives you a whack of time you'd otherwise be bored in, during which you can do such useful things as:

- Updating documentation

- Reviewing patches and approving their application

- Spreadsheeting for budget planning

- Reviewing monitoring system logs for anomalies any automated systems may have missed

- Reviewing the monitoring and alerting framework to make sure it makes sense

Or, those 'low priority' tasks we rarely seem to get to until it's too painful not to get around to them.

Not all sysadminly types need to be on the fire watch, but some do. In DevOps environments where the systems folk are neck deep in development, some of them may only be on it for a little while. In other environments, specialists who are rarely involved in incident-response, such as Storage Administrators, may never get on the front-line fire watch but may carry the 2nd/3rd tier pager.
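For the curious, the split between a front-line fire watch and a 2nd/3rd tier pager can be sketched in a few lines of Python. This is only an illustration under assumptions of my own choosing: shifts are a week long, the names are made up, and `Rotation` and `escalation_chain` are hypothetical helpers rather than anything from a real paging product.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Rotation:
    """A weekly rotation through a fixed list of people (hypothetical helper)."""
    start: date        # first day of the first shift
    people: list[str]  # order in which people take their week

    def on_duty(self, day: date) -> str:
        # Count whole weeks since the rotation started, then wrap around the list.
        weeks_elapsed = (day - self.start).days // 7
        return self.people[weeks_elapsed % len(self.people)]


def escalation_chain(day: date, tiers: list[Rotation]) -> list[str]:
    """Who gets called, in order, if an incident fires on the given day."""
    return [tier.on_duty(day) for tier in tiers]


# Assumed example data: generalists take the front-line fire watch,
# storage specialists only ever carry the second-tier pager.
front_line = Rotation(date(2011, 1, 3), ["alice", "bob", "carol"])
storage_tier = Rotation(date(2011, 1, 3), ["dave", "erin"])

chain = escalation_chain(date(2011, 1, 12), [front_line, storage_tier])
print(chain)
```

Keeping each tier as its own rotation means the specialists can cycle on a different cadence, or with a different headcount, than the people doing the front-line watch.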

Note, this is in addition to any helpdesk that may be in evidence. The person on fire watch will be the first responder in case something like a load-spike triggers a cascading failure among the front-end load-balanced web-servers. A fire-watch is more important for entities that have little application diversity and few internal users; things like Etsy, eBay, and Amazon. It's less important for entities that have a lot of internal users and a huge diversity in internal systems, places like WWU. In places like that, you can have a lot of things that can go wrong in little ways, and who knows how to fix 'em is hard to track.

If nothing else, you can put "fire watch" on your calendar as an excuse to do the low-level tasks that need to get done while at the same time fending off meeting invites.