For some, every tweet is sacred. Each and every one is to be read, and caught up on if they've been away.

For some it's a stream of interesting, to be looked in upon when the whim strikes. There may be a list of really interesting people that gets checked more often.

For some it's ARG ARG NOISY GO AWAY ARG. This is why we have email.

For some sysadmin teams, every alert is sacred. To be acted upon the moment it arrives, and looked at when you've been away to be sure everything is handled.

For some sysadmin teams, the alerts are glanced at for suspicious patterns but otherwise ignored. Some really interesting ones may be forwarded by email-rules to phones.

For some sysadmin teams, the alerts are completely ignored. Problems are handled in email when other people notice them.

If you ever wondered how someone with a thousand twitter-follows can keep up, it's simple: they don't. It's lossy, it has to be. With that many follows you're dealing with a tweet every 30 seconds and you only keep up when you're bored. Or you make Lists of people you actually care about following, and only browse the master list when there isn't anything else going on.

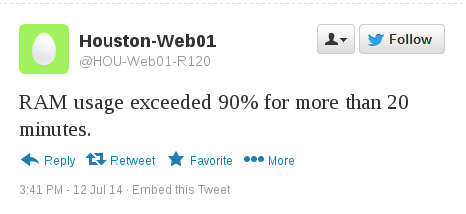

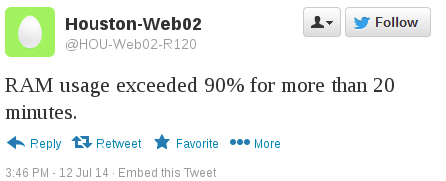

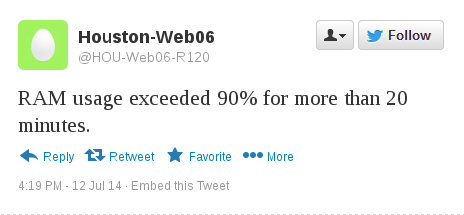

The same dynamic holds true for a system with 600 individual servers with a variety of applications installed on them. Even if you only turn on OS basics like CPU/RAM/Swap/Disk, your alert stream is going to be very noisy. And like twitter there is going to be a lot of the server equivalent of, "I just had dinner, that... was a lot of food" tweets.

So riveting. *thud*

When it comes to alerts you need to consider when you want to know something. Putting everything in email is a great way to ignore it, and maybe expose it to some rudimentary email-rule based noise-filters. But wouldn't it be better if that email stream were high quality? And you had an actual website or something you could query for the historical stuff? Yeah, that'd be great.

Wait, what? Crap. Why?

Here is a nasty truth: I don't give a damn about CPU/RAM/Swap/Disk. Not in an ACT NOW, MONKEY! kind of way, anyway. I care about that stuff for trending and for historical troubleshooting. Think about the things we want to know and when we want to know them. Once you have an idea about that, you can start defining alerts that will look more like a well curated twitter-feed you don't want to miss, and less like the Alerts folder with 1269 unread messages in it.

So how can you make your alert stream more like a well curated feed, and not the firehose of noise it likely is?

Rule 1: Not all monitorables need a defined alert.

Just because you can track it doesn't mean you need to figure out an alert threshold for it and figure out what text to put into the email/sms. Definitely keep track of it if you have an operational need, but don't try to bother humans with it unless you have a definite need. "I might want to know once," is not definite, it's paranoia. Some systems, especially single points of failure, really do need that kind of alerting so set it up. Yes, please tell me that the 6-figure router I have one of is having a high CPU event, I want to know that. But please don't tell me about high NIC usage on the main database when the backups are staging to the archive system.

While there are people who really will skim through 800 tweets over breakfast in order to catch up with what happened overnight, and there are sysadmins who will read all 800 messages in the Alerts folder over breakfast, the rest of us look at that and go "AAAG information overload!" And skimming? That's great for things you need to know about eventually, but absolute crap when you need to react RIGHT BLOODY NOW.

Rule 2: Not everyone treats alerts the same way you do. Account for that.

You may look at every alert as it arrives and determine if it needs action, but your peers may be more of the "only tell me if something is actually wrong" variety, and have a different definition of "actually wrong". Alert systems are supported by people, so how those people work needs to be dealt with. Come to a consensus, and keep maintaining it. If you're an alerts-over-breakfast type, your cube-mate may be a page-me-if-anything-breaks type. The two of you need to figure out common ground.

Rule 3: Spend the effort to build the kind of alerts you actually need.

The out-of-the-box alerts are almost always... boring. I rarely, if ever, want to know about high CPU/RAM/Swap/Disk events the moment they happen. Some, like disk-space, I should be picking up from trending reports. Yes, we do have spikes we need to deal with (dumping core on a 768GB RAM box? Tell me), and root/c: drive filling, but that should be a targeted alert, not one that goes on everything.

Another boring alert? Pingable. It backstops the actual thing I'm worried about, whether or not TCP/8443 is serving SSL and returns an HTTP/200 status code, but by itself isn't something I want to get an alert on. The big difference is if my monitoring system is smart enough to figure out the "IF !pingable THEN AlertSuppressDependentServices" logic. In that case I really do want pingable, because a single alert tells me a whole fistful of services is down, and I'm not getting a storm of messages about each down service on the box.
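That suppression logic is simple enough to sketch. This is only an illustration: `HostStatus` and `alerts_for` are names I made up for the example, not the API of any real monitoring product, and a real system would get its check results from somewhere more interesting than a dict.

```python
from dataclasses import dataclass, field


@dataclass
class HostStatus:
    """Hypothetical snapshot of one host's latest check results."""
    name: str
    pingable: bool
    # service name -> True if its check passed
    services: dict[str, bool] = field(default_factory=dict)


def alerts_for(host: HostStatus) -> list[str]:
    """Return the alerts worth sending for one host.

    If the host is not pingable, send one alert and suppress the
    per-service alerts that depend on it.
    """
    if not host.pingable:
        count = len(host.services)
        return [f"{host.name}: host unreachable ({count} dependent service checks suppressed)"]
    return [
        f"{host.name}: {service} check failed"
        for service, ok in sorted(host.services.items())
        if not ok
    ]


# Usage: a dead box produces one message, not one per service.
web01 = HostStatus("web01", pingable=False, services={"https-8443": False, "ssh": False})
web02 = HostStatus("web02", pingable=True, services={"https-8443": False, "ssh": True})
print(alerts_for(web01))
print(alerts_for(web02))
```

One alert for the dead box, one alert for the box that is up but not serving on 8443. That second one is the alert I actually care about.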

Spend the time to build the alerts you actually want. If you're doing clustered services or horizontally scaled services you generally don't care much about single-system outages; you care about whether or not the service is up and performing acceptably. These are harder alerts to build, but they're what you actually want in most cases.
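As a rough sketch of what a service-level alert looks like, here is one that only fires when too few nodes in a cluster are healthy. The function name, the `min_healthy` threshold, and the idea that "healthy" is a single true/false per node are all assumptions made for the example; a real check would also want to look at whether the service is performing acceptably, not just answering.

```python
from typing import Optional


def cluster_alert(service: str, node_ok: dict[str, bool], min_healthy: int) -> Optional[str]:
    """Alert on the service as a whole, not on individual nodes.

    node_ok maps node name -> True if that node passed its health check.
    Returns None while at least min_healthy nodes are passing.
    """
    healthy = sum(1 for ok in node_ok.values() if ok)
    if healthy >= min_healthy:
        return None
    return f"{service}: only {healthy}/{len(node_ok)} nodes healthy (need {min_healthy})"


# Usage: losing one web node out of four is not worth a message,
# losing three of them is.
one_down = {"web01": True, "web02": True, "web03": False, "web04": True}
three_down = {"web01": False, "web02": False, "web03": False, "web04": True}
print(cluster_alert("storefront", one_down, min_healthy=2))
print(cluster_alert("storefront", three_down, min_healthy=2))
```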

Rule 4: Create different classes of alert based on urgency.

Some alerts are "wake up a human right now" alerts, and some are more "bother whoever is on duty right now" alerts. And some are "so long as someone gets to it within a few hours we're OK." This will probably require setting up some kind of on-call schedule with a push notice other than email, such as SMS or a mobile app. Email is good for a lot, but it is only as good as the built-in filtering system's ability to isolate signal in a bunch of noise and forward to email-to-SMS gateways.

Sending everything to email and trusting each recipient has good enough mail filters to do the correct urgency handling doesn't scale. And isn't consistent.
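Here is a minimal sketch of doing the urgency handling once, centrally, instead of in everybody's mail filters. The three classes come straight from the paragraph above; the alert names and channel names are hypothetical placeholders, and so is the choice to drop unknown alerts into the least urgent class.

```python
from enum import Enum


class Urgency(Enum):
    WAKE_A_HUMAN = 1   # wake up a human right now
    ON_DUTY = 2        # bother whoever is on duty right now
    WITHIN_HOURS = 3   # fine so long as someone gets to it within a few hours


# Hypothetical delivery channel for each class.
CHANNELS = {
    Urgency.WAKE_A_HUMAN: "sms",
    Urgency.ON_DUTY: "mobile_app",
    Urgency.WITHIN_HOURS: "email",
}

# Hypothetical alert names; the team agrees on this table, not each inbox.
ALERT_CLASSES = {
    "service-down": Urgency.WAKE_A_HUMAN,
    "replica-lag": Urgency.ON_DUTY,
    "cert-expires-14d": Urgency.WITHIN_HOURS,
}


def route(alert_name: str) -> tuple[Urgency, str]:
    """Return (urgency, channel) for an alert; unclassified alerts are low urgency."""
    urgency = ALERT_CLASSES.get(alert_name, Urgency.WITHIN_HOURS)
    return urgency, CHANNELS[urgency]


print(route("service-down"))
print(route("something-nobody-classified"))
```

Defaulting unclassified alerts to email is a judgment call. If you'd rather be woken up by surprises, flip the default, but then expect to spend time classifying things.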

Rule 4a: Format your alert text with the 160-character limit in mind.

The chances of your alerts ending up on mobile, filtered through SMS, are pretty high. Best to plan for that from the start.
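One way to plan for it is to put the important part first and truncate the rest. A minimal sketch, assuming a made-up `format_sms` helper and a host/check/detail layout that is just one reasonable ordering. It counts Python characters, which only roughly matches what an SMS gateway counts, so treat the limit as a target rather than a guarantee.

```python
SMS_LIMIT = 160


def format_sms(host: str, check: str, detail: str, limit: int = SMS_LIMIT) -> str:
    """Build a one-line alert that fits in `limit` characters.

    The host and check name go first so they survive truncation;
    the free-form detail is what gets cut.
    """
    head = f"{host} {check}: "
    room = limit - len(head)
    if len(detail) > room:
        detail = detail[: max(room - 3, 0)] + "..."
    # Final slice covers the odd case where the head alone is too long.
    return (head + detail)[:limit]


# Usage: a long detail string is cut, the host and check name are kept.
long_detail = "TCP/8443 did not return HTTP/200 " * 10
message = format_sms("web01", "https-8443", long_detail)
print(len(message), message)
```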

Rule 5: Ask the right questions during post-mortems.

Post-mortem processes are there for a reason, and that's to do better next time. This is a perfect opportunity to identify improvements in your monitoring and alert systems! Some questions you should be asking:

- Did the monitoring system pick up the problem?

- Did we have an alert configured to notice the problem?

- Did we react to the monitoring system, a customer report, or a sysadmin with a bad feeling about this?

- What changes can we make to allow the alert system to notify us of this kind of problem?

- What changes can we make to allow us to notice this event building up so we can deal with it before it becomes a problem?

I have seen many, many cases where the monitoring system DID pick up the problem and DID alert us to it, but no one noticed because it was one alert in a pile of 50 that arrived in a given hour. We reacted when a customer asked us about why their stuff was down. What were the other 49 messages? A few were side-effects of the problem and the rest were routine CPU/RAM/Swap/Disk high-usage notices that we all stopped paying attention to.

Oops.

For a very high profile event that can have some serious consequences for a sysadmin team. You don't want those consequences.

Rule 6: Create some kind of trend-tracking system.

Trends allow you to get ahead of problems. It may be a weekly report with graphs of historical usage that gets mailed out for humans to look at and go, "Hm, that looks kinda bad," over. It may be an actual analytics system that puts in trend-lines and helpful, "vSphere cluster PROD will be 100% RAM in 39.4 days" text. Or it may be a weekly recurring helpdesk ticket to have someone look at charts for an hour.

Whatever it is, you need something or someone to keep track of trends. Put all those monitorables you're not alarming on to good use and get ahead of the ball for a change. Figure out 3 months before you run out of disk-space that you're going to need to add more. Notice that the latest hotfix has increased RAM usage across the web cluster by 17%. These are not know-right-now things, they're the kind of thing that is alarming, but on a scale of weeks not minutes. You need that kind of alerting too!
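The "will be 100% in N days" text doesn't need a fancy analytics product to get started. Here is a minimal sketch that fits a straight line to usage samples and extrapolates. The function name and the sample data are made up, and a straight line is a big assumption: real usage has spikes and seasonality, so treat the answer as a prompt to go look at the chart, not as a forecast.

```python
from typing import Optional


def days_until_full(samples: list[tuple[float, float]], capacity: float = 100.0) -> Optional[float]:
    """Estimate days from the last sample until usage reaches capacity.

    samples is a list of (day, percent_used) pairs. Uses an ordinary
    least-squares line; returns None if there is too little data or
    usage is flat or shrinking.
    """
    n = len(samples)
    if n < 2:
        return None
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in samples)
    if sxx == 0:
        return None
    slope = sum((x - mean_x) * (y - mean_y) for x, y in samples) / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    day_full = (capacity - intercept) / slope
    return max(day_full - samples[-1][0], 0.0)


# Usage: weekly disk readings growing about one point per day.
weekly = [(0, 50.0), (7, 57.0), (14, 64.0)]
remaining = days_until_full(weekly)
if remaining is not None:
    print(f"Disk will be 100% full in about {remaining:.1f} days")
```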

It's time to cull your twitter alert stream.